## Overview

Note: this article is based on the Android 9.0 source code. For brevity, the quoted source has been trimmed in places.

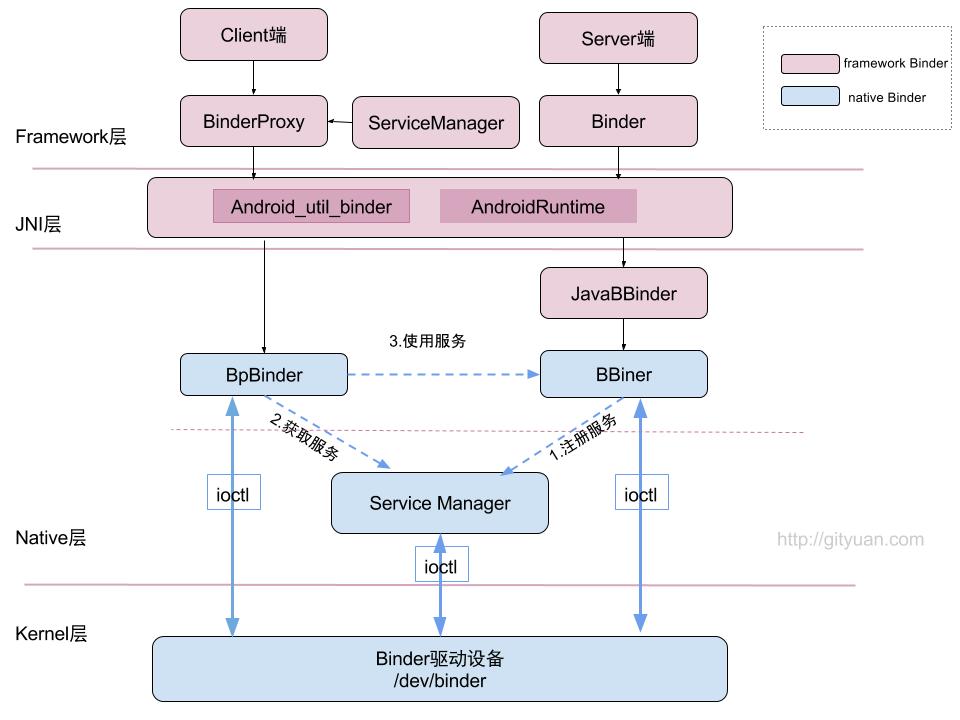

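The Framework layer has its own Binder communication architecture. Its implementation ultimately relies on the Native layer working together with the Binder driver.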

## Initialization

### startReg

During Android boot, the Zygote process registers JNI methods with the virtual machine as it starts. It does so by calling AndroidRuntime::startReg:

```cpp
static const RegJNIRec gRegJNI[] = {
    REG_JNI(register_com_android_internal_os_RuntimeInit),
    REG_JNI(register_com_android_internal_os_ZygoteInit_nativeZygoteInit),
    REG_JNI(register_android_os_SystemClock),
    // ... other entries trimmed
    REG_JNI(register_android_os_Binder),
};

int AndroidRuntime::startReg(JNIEnv* env)
{
    if (register_jni_procs(gRegJNI, NELEM(gRegJNI), env) < 0) {
        return -1;
    }
    return 0;
}

int register_android_os_Binder(JNIEnv* env)
{
    // Register the Binder, BinderInternal and BinderProxy classes in turn.
    if (int_register_android_os_Binder(env) < 0)
        return -1;
    if (int_register_android_os_BinderInternal(env) < 0)
        return -1;
    if (int_register_android_os_BinderProxy(env) < 0)
        return -1;

    jclass clazz = FindClassOrDie(env, "android/util/Log");
    gLogOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gLogOffsets.mLogE = GetStaticMethodIDOrDie(env, clazz, "e",
            "(Ljava/lang/String;Ljava/lang/String;Ljava/lang/Throwable;)I");

    clazz = FindClassOrDie(env, "android/os/ParcelFileDescriptor");
    gParcelFileDescriptorOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gParcelFileDescriptorOffsets.mConstructor = GetMethodIDOrDie(env, clazz, "<init>",
            "(Ljava/io/FileDescriptor;)V");

    clazz = FindClassOrDie(env, "android/os/StrictMode");
    gStrictModeCallbackOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gStrictModeCallbackOffsets.mCallback = GetStaticMethodIDOrDie(env, clazz,
            "onBinderStrictModePolicyChange", "(I)V");

    clazz = FindClassOrDie(env, "java/lang/Thread");
    gThreadDispatchOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gThreadDispatchOffsets.mDispatchUncaughtException = GetMethodIDOrDie(env, clazz,
            "dispatchUncaughtException", "(Ljava/lang/Throwable;)V");
    gThreadDispatchOffsets.mCurrentThread = GetStaticMethodIDOrDie(env, clazz,
            "currentThread", "()Ljava/lang/Thread;");

    return 0;
}
```

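The helper methods involved are listed below. Each OrDie variant wraps the plain JNI call and aborts the process if the call fails:

- FindClassOrDie(env, kBinderPathName) is equivalent to env->FindClass(kBinderPathName)
- MakeGlobalRefOrDie() is equivalent to env->NewGlobalRef()
- GetMethodIDOrDie() is equivalent to env->GetMethodID()
- GetFieldIDOrDie() is equivalent to env->GetFieldID()
- RegisterMethodsOrDie() is equivalent to AndroidRuntime::registerNativeMethods()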

### Registering the Binder class

```cpp
static struct bindernative_offsets_t
{
    jclass mClass;
    jmethodID mExecTransact;
    jfieldID mObject;
} gBinderOffsets;

static const JNINativeMethod gBinderMethods[] = {
    /* name, signature, funcPtr */
    { "getCallingPid", "()I", (void*)android_os_Binder_getCallingPid },
    { "getCallingUid", "()I", (void*)android_os_Binder_getCallingUid },
    { "clearCallingIdentity", "()J", (void*)android_os_Binder_clearCallingIdentity },
    { "restoreCallingIdentity", "(J)V", (void*)android_os_Binder_restoreCallingIdentity },
    { "setThreadStrictModePolicy", "(I)V", (void*)android_os_Binder_setThreadStrictModePolicy },
    { "getThreadStrictModePolicy", "()I", (void*)android_os_Binder_getThreadStrictModePolicy },
    { "flushPendingCommands", "()V", (void*)android_os_Binder_flushPendingCommands },
    { "getNativeBBinderHolder", "()J", (void*)android_os_Binder_getNativeBBinderHolder },
    { "getNativeFinalizer", "()J", (void*)android_os_Binder_getNativeFinalizer },
    { "blockUntilThreadAvailable", "()V", (void*)android_os_Binder_blockUntilThreadAvailable }
};

const char* const kBinderPathName = "android/os/Binder";

static int int_register_android_os_Binder(JNIEnv* env)
{
    jclass clazz = FindClassOrDie(env, kBinderPathName);

    // Cache the class, the execTransact method ID and the mObject field ID.
    gBinderOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gBinderOffsets.mExecTransact = GetMethodIDOrDie(env, clazz, "execTransact", "(IJJI)Z");
    gBinderOffsets.mObject = GetFieldIDOrDie(env, clazz, "mObject", "J");

    return RegisterMethodsOrDie(env, kBinderPathName, gBinderMethods,
            NELEM(gBinderMethods));
}
```

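int_register_android_os_Binder does two things:

- Through gBinderOffsets, it caches information about the Java-layer Binder class (the class reference, the execTransact method ID and the mObject field ID), giving the JNI layer a channel into the Java layer.
- Through RegisterMethodsOrDie, it maps each entry of the gBinderMethods array to its native function, giving the Java layer a channel into the JNI layer.

In other words, this step builds the bridge that lets the Binder class be called in both directions between the Native layer and the Framework layer.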
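The Java-to-native direction can be pictured as a lookup table keyed by method name and signature. The sketch below models only that idea in plain Java, with no Android or JNI dependency; NativeMethodTable, register and call are hypothetical names invented for this illustration, and the pid value is made up.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical model of a JNINativeMethod table: (name + signature) -> function. */
final class NativeMethodTable {
    private final Map<String, Supplier<Object>> methods = new HashMap<>();

    /** Fails loudly on a duplicate entry, in the spirit of the "OrDie" helpers. */
    void register(String name, String signature, Supplier<Object> fn) {
        String key = name + signature;
        if (methods.putIfAbsent(key, fn) != null) {
            throw new IllegalStateException("already registered: " + key);
        }
    }

    /** Roughly what happens when Java code calls a method declared native. */
    Object call(String name, String signature) {
        Supplier<Object> fn = methods.get(name + signature);
        if (fn == null) {
            throw new UnsatisfiedLinkError(name + signature);
        }
        return fn.get();
    }

    public static void main(String[] args) {
        NativeMethodTable table = new NativeMethodTable();
        table.register("getCallingPid", "()I", () -> 1234); // made-up pid
        System.out.println(table.call("getCallingPid", "()I"));
    }
}
```

A missing entry surfaces as UnsatisfiedLinkError, which is also what a Java program sees when a native method was never registered.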

### Registering the BinderInternal class

```cpp
static struct binderinternal_offsets_t
{
    jclass mClass;
    jmethodID mForceGc;
    jmethodID mProxyLimitCallback;
} gBinderInternalOffsets;

static const JNINativeMethod gBinderInternalMethods[] = {
    /* name, signature, funcPtr */
    { "getContextObject", "()Landroid/os/IBinder;", (void*)android_os_BinderInternal_getContextObject },
    { "joinThreadPool", "()V", (void*)android_os_BinderInternal_joinThreadPool },
    { "disableBackgroundScheduling", "(Z)V", (void*)android_os_BinderInternal_disableBackgroundScheduling },
    { "setMaxThreads", "(I)V", (void*)android_os_BinderInternal_setMaxThreads },
    { "handleGc", "()V", (void*)android_os_BinderInternal_handleGc },
    { "nSetBinderProxyCountEnabled", "(Z)V", (void*)android_os_BinderInternal_setBinderProxyCountEnabled },
    { "nGetBinderProxyPerUidCounts", "()Landroid/util/SparseIntArray;", (void*)android_os_BinderInternal_getBinderProxyPerUidCounts },
    { "nGetBinderProxyCount", "(I)I", (void*)android_os_BinderInternal_getBinderProxyCount },
    { "nSetBinderProxyCountWatermarks", "(II)V", (void*)android_os_BinderInternal_setBinderProxyCountWatermarks }
};

const char* const kBinderInternalPathName = "com/android/internal/os/BinderInternal";

static int int_register_android_os_BinderInternal(JNIEnv* env)
{
    jclass clazz = FindClassOrDie(env, kBinderInternalPathName);

    gBinderInternalOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gBinderInternalOffsets.mForceGc = GetStaticMethodIDOrDie(env, clazz, "forceBinderGc", "()V");
    gBinderInternalOffsets.mProxyLimitCallback = GetStaticMethodIDOrDie(env, clazz,
            "binderProxyLimitCallbackFromNative", "(I)V");

    jclass SparseIntArrayClass = FindClassOrDie(env, "android/util/SparseIntArray");
    gSparseIntArrayOffsets.classObject = MakeGlobalRefOrDie(env, SparseIntArrayClass);
    gSparseIntArrayOffsets.constructor = GetMethodIDOrDie(env,
            gSparseIntArrayOffsets.classObject, "<init>", "()V");
    gSparseIntArrayOffsets.put = GetMethodIDOrDie(env,
            gSparseIntArrayOffsets.classObject, "put", "(II)V");

    BpBinder::setLimitCallback(android_os_BinderInternal_proxyLimitcallback);

    return RegisterMethodsOrDie(env, kBinderInternalPathName, gBinderInternalMethods,
            NELEM(gBinderInternalMethods));
}
```

### Registering the BinderProxy class

```cpp
static struct error_offsets_t
{
    jclass mClass;
} gErrorOffsets;

static struct binderproxy_offsets_t
{
    jclass mClass;
    jmethodID mGetInstance;
    jmethodID mSendDeathNotice;
    jmethodID mDumpProxyDebugInfo;
    jfieldID mNativeData;
} gBinderProxyOffsets;

static struct class_offsets_t
{
    jmethodID mGetName;
} gClassOffsets;

static const JNINativeMethod gBinderProxyMethods[] = {
    /* name, signature, funcPtr */
    {"pingBinder", "()Z", (void*)android_os_BinderProxy_pingBinder},
    {"isBinderAlive", "()Z", (void*)android_os_BinderProxy_isBinderAlive},
    {"getInterfaceDescriptor", "()Ljava/lang/String;", (void*)android_os_BinderProxy_getInterfaceDescriptor},
    {"transactNative", "(ILandroid/os/Parcel;Landroid/os/Parcel;I)Z", (void*)android_os_BinderProxy_transact},
    {"linkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)V", (void*)android_os_BinderProxy_linkToDeath},
    {"unlinkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)Z", (void*)android_os_BinderProxy_unlinkToDeath},
    {"getNativeFinalizer", "()J", (void*)android_os_BinderProxy_getNativeFinalizer},
};

const char* const kBinderProxyPathName = "android/os/BinderProxy";

static int int_register_android_os_BinderProxy(JNIEnv* env)
{
    jclass clazz = FindClassOrDie(env, "java/lang/Error");
    gErrorOffsets.mClass = MakeGlobalRefOrDie(env, clazz);

    clazz = FindClassOrDie(env, kBinderProxyPathName);
    gBinderProxyOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    gBinderProxyOffsets.mGetInstance = GetStaticMethodIDOrDie(env, clazz, "getInstance",
            "(JJ)Landroid/os/BinderProxy;");
    gBinderProxyOffsets.mSendDeathNotice = GetStaticMethodIDOrDie(env, clazz, "sendDeathNotice",
            "(Landroid/os/IBinder$DeathRecipient;)V");
    gBinderProxyOffsets.mDumpProxyDebugInfo = GetStaticMethodIDOrDie(env, clazz,
            "dumpProxyDebugInfo", "()V");
    gBinderProxyOffsets.mNativeData = GetFieldIDOrDie(env, clazz, "mNativeData", "J");

    clazz = FindClassOrDie(env, "java/lang/Class");
    gClassOffsets.mGetName = GetMethodIDOrDie(env, clazz, "getName", "()Ljava/lang/String;");

    return RegisterMethodsOrDie(env, kBinderProxyPathName, gBinderProxyMethods,
            NELEM(gBinderProxyMethods));
}
```

## Registering a service

### ServiceManager.addService

```java
// ServiceManager.java
public static void addService(String name, IBinder service, boolean allowIsolated,
        int dumpPriority) {
    try {
        getIServiceManager().addService(name, service, allowIsolated, dumpPriority);
    } catch (RemoteException e) {
        Log.e(TAG, "error in addService", e);
    }
}

private static IServiceManager getIServiceManager() {
    if (sServiceManager != null) {
        return sServiceManager;
    }
    sServiceManager = ServiceManagerNative
            .asInterface(Binder.allowBlocking(BinderInternal.getContextObject()));
    return sServiceManager;
}
```

```cpp
// android_util_Binder.cpp
static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)
{
    sp<IBinder> b = ProcessState::self()->getContextObject(NULL);
    return javaObjectForIBinder(env, b);
}

jobject javaObjectForIBinder(JNIEnv* env, const sp<IBinder>& val)
{
    if (val == NULL) return NULL;

    BinderProxyNativeData* nativeData = gNativeDataCache;
    if (nativeData == nullptr) {
        nativeData = new BinderProxyNativeData();
    }
    // Calls BinderProxy.getInstance(nativeData, iBinder) in the Java layer.
    jobject object = env->CallStaticObjectMethod(gBinderProxyOffsets.mClass,
            gBinderProxyOffsets.mGetInstance, (jlong) nativeData, (jlong) val.get());
    if (env->ExceptionCheck()) {
        gNativeDataCache = nullptr;
        return NULL;
    }
    BinderProxyNativeData* actualNativeData = getBPNativeData(env, object);
    if (actualNativeData == nativeData) {
        // A new BinderProxy was created: initialize its native data.
        nativeData->mOrgue = new DeathRecipientList;
        nativeData->mObject = val;
        gNativeDataCache = nullptr;
        ++gNumProxies;
        if (gNumProxies >= gProxiesWarned + PROXY_WARN_INTERVAL) {
            ALOGW("Unexpectedly many live BinderProxies: %d\n", gNumProxies);
            gProxiesWarned = gNumProxies;
        }
    } else {
        // An existing BinderProxy was returned: keep nativeData for next time.
        gNativeDataCache = nativeData;
    }
    return object;
}

BinderProxyNativeData* getBPNativeData(JNIEnv* env, jobject obj) {
    return (BinderProxyNativeData *) env->GetLongField(obj, gBinderProxyOffsets.mNativeData);
}
```

```java
// BinderProxy.java
private static BinderProxy getInstance(long nativeData, long iBinder) {
    BinderProxy result;
    try {
        result = sProxyMap.get(iBinder);
        if (result != null) {
            return result;
        }
        result = new BinderProxy(nativeData);
    } catch (Throwable e) {
        throw e;
    }
    sProxyMap.set(iBinder, result);
    return result;
}

private BinderProxy(long nativeData) {
    mNativeData = nativeData;
}

// Binder.java
public static IBinder allowBlocking(IBinder binder) {
    try {
        if (binder instanceof BinderProxy) {
            ((BinderProxy) binder).mWarnOnBlocking = false;
        } else if (binder != null && binder.getInterfaceDescriptor() != null
                && binder.queryLocalInterface(binder.getInterfaceDescriptor()) == null) {
            Log.w(TAG, "Unable to allow blocking on interface " + binder);
        }
    } catch (RemoteException ignored) {
    }
    return binder;
}

public abstract class ServiceManagerNative extends Binder implements IServiceManager {
    static public IServiceManager asInterface(IBinder obj) {
        if (obj == null) {
            return null;
        }
        IServiceManager in = (IServiceManager) obj.queryLocalInterface(descriptor);
        if (in != null) {
            return in;
        }
        return new ServiceManagerProxy(obj);
    }
}

// BinderProxy.java: a proxy never has a local implementation.
public IInterface queryLocalInterface(String descriptor) {
    return null;
}

public class Binder implements IBinder {
    public void attachInterface(@Nullable IInterface owner, @Nullable String descriptor) {
        mOwner = owner;
        mDescriptor = descriptor;
    }

    public @Nullable IInterface queryLocalInterface(@NonNull String descriptor) {
        if (mDescriptor != null && mDescriptor.equals(descriptor)) {
            return mOwner;
        }
        return null;
    }
}

class ServiceManagerProxy implements IServiceManager {
    public ServiceManagerProxy(IBinder remote) {
        mRemote = remote;
    }

    public IBinder asBinder() {
        return mRemote;
    }
}
```

From the above, ServiceManagerNative.asInterface(Binder.allowBlocking(BinderInternal.getContextObject())) is equivalent to new ServiceManagerProxy(new BinderProxy(nativeData)), where nativeData->mObject = new BpBinder(0). The BpBinder is the proxy side of Binder, and handle 0 refers to the Native-layer Service Manager.

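The Framework-layer ServiceManager hands its actual work to the BinderProxy held in ServiceManagerProxy's mRemote member, and BinderProxy in turn calls into a BpBinder object through JNI. The core functionality of the upper-layer Binder architecture therefore depends on the services of the Native architecture.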
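The local-versus-remote decision made by asInterface can be reproduced without any Android classes. The sketch below is a simplified model under stated assumptions: Iface, BinderLike, LocalBinder, RemoteBinder, ManagerProxy and the descriptor string are all hypothetical stand-ins for IInterface, IBinder, Binder, BinderProxy, ServiceManagerProxy and the real interface descriptor.

```java
/** Hypothetical model of the asInterface pattern; not the Android classes. */
final class AsInterfaceSketch {
    static final String DESCRIPTOR = "demo.IManager"; // made-up descriptor

    interface Iface { }

    interface BinderLike {
        Iface queryLocalInterface(String descriptor);
    }

    /** Same-process binder: remembers its owner, like Binder.attachInterface. */
    static final class LocalBinder implements BinderLike {
        private Iface owner;
        private String descriptor;

        void attachInterface(Iface owner, String descriptor) {
            this.owner = owner;
            this.descriptor = descriptor;
        }

        @Override
        public Iface queryLocalInterface(String descriptor) {
            return (this.descriptor != null && this.descriptor.equals(descriptor))
                    ? owner : null;
        }
    }

    /** Remote handle: never has a local implementation, like BinderProxy. */
    static final class RemoteBinder implements BinderLike {
        @Override
        public Iface queryLocalInterface(String descriptor) {
            return null;
        }
    }

    /** Wraps the remote handle, like ServiceManagerProxy wraps mRemote. */
    static final class ManagerProxy implements Iface {
        final BinderLike remote;

        ManagerProxy(BinderLike remote) {
            this.remote = remote;
        }
    }

    /** Returns the local implementation if there is one, otherwise a new proxy. */
    static Iface asInterface(BinderLike obj) {
        if (obj == null) {
            return null;
        }
        Iface local = obj.queryLocalInterface(DESCRIPTOR);
        return (local != null) ? local : new ManagerProxy(obj);
    }

    public static void main(String[] args) {
        Iface result = asInterface(new RemoteBinder());
        System.out.println(result instanceof ManagerProxy);
    }
}
```

For the Service Manager the remote branch is always taken in a client process, which is why the result is a ServiceManagerProxy wrapping a BinderProxy.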

### ServiceManagerProxy.addService

```java
class ServiceManagerProxy implements IServiceManager {
    public ServiceManagerProxy(IBinder remote) {
        mRemote = remote;
    }

    public IBinder asBinder() {
        return mRemote;
    }

    public void addService(String name, IBinder service, boolean allowIsolated,
            int dumpPriority) throws RemoteException {
        Parcel data = Parcel.obtain();
        Parcel reply = Parcel.obtain();
        data.writeInterfaceToken(IServiceManager.descriptor);
        data.writeString(name);
        data.writeStrongBinder(service);
        data.writeInt(allowIsolated ? 1 : 0);
        data.writeInt(dumpPriority);
        mRemote.transact(ADD_SERVICE_TRANSACTION, data, reply, 0);
        reply.recycle();
        data.recycle();
    }
}

// Parcel.java
public final void writeStrongBinder(IBinder val) {
    nativeWriteStrongBinder(mNativePtr, val);
}
```

```cpp
// android_os_Parcel.cpp
static void android_os_Parcel_writeStrongBinder(JNIEnv* env, jclass clazz,
        jlong nativePtr, jobject object)
{
    Parcel* parcel = reinterpret_cast<Parcel*>(nativePtr);
    if (parcel != NULL) {
        const status_t err = parcel->writeStrongBinder(ibinderForJavaObject(env, object));
        if (err != NO_ERROR) {
            signalExceptionForError(env, clazz, err);
        }
    }
}

// android_util_Binder.cpp
sp<IBinder> ibinderForJavaObject(JNIEnv* env, jobject obj)
{
    if (obj == NULL) return NULL;

    // A Java Binder (local service object): return its JavaBBinder.
    if (env->IsInstanceOf(obj, gBinderOffsets.mClass)) {
        JavaBBinderHolder* jbh = (JavaBBinderHolder*)
            env->GetLongField(obj, gBinderOffsets.mObject);
        return jbh->get(env, obj);
    }

    // A Java BinderProxy: return the native IBinder it wraps.
    if (env->IsInstanceOf(obj, gBinderProxyOffsets.mClass)) {
        return getBPNativeData(env, obj)->mObject;
    }

    return NULL;
}

class JavaBBinderHolder
{
public:
    sp<JavaBBinder> get(JNIEnv* env, jobject obj)
    {
        AutoMutex _l(mLock);
        sp<JavaBBinder> b = mBinder.promote();
        if (b == NULL) {
            b = new JavaBBinder(env, obj);
            mBinder = b;
        }
        return b;
    }
    // ... members trimmed
};

class JavaBBinder : public BBinder
{
    // ... body trimmed
};
```

So data.writeStrongBinder(service) is ultimately equivalent to parcel->writeStrongBinder(new JavaBBinder(env, obj)). The parcel->writeStrongBinder method itself was covered in the earlier Native-layer analysis.
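JavaBBinderHolder creates the JavaBBinder lazily and keeps only a weak pointer to it, so repeated writes of the same service reuse one native object while it is alive. The sketch below models that caching with java.lang.ref.WeakReference; BinderHolderSketch, Holder and NativeBinder are hypothetical names, and Java weak references are only an approximation of the wp/sp reference counting used in the native code.

```java
import java.lang.ref.WeakReference;

/** Hypothetical model of JavaBBinderHolder::get; not the real native class. */
final class BinderHolderSketch {

    /** Stand-in for the native JavaBBinder that wraps a Java Binder object. */
    static final class NativeBinder {
        final Object javaObject;

        NativeBinder(Object javaObject) {
            this.javaObject = javaObject;
        }
    }

    /** Lazily creates the native object and remembers it weakly. */
    static final class Holder {
        private WeakReference<NativeBinder> weak = new WeakReference<>(null);

        synchronized NativeBinder get(Object javaObject) {
            NativeBinder b = weak.get();              // like mBinder.promote()
            if (b == null) {
                b = new NativeBinder(javaObject);     // like new JavaBBinder(env, obj)
                weak = new WeakReference<>(b);        // like mBinder = b
            }
            return b;
        }
    }

    public static void main(String[] args) {
        Object service = new Object();
        Holder holder = new Holder();
        NativeBinder first = holder.get(service);
        NativeBinder second = holder.get(service);
        System.out.println("same native object: " + (first == second));
    }
}
```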

### BinderProxy.transact

```java
// BinderProxy.java
public boolean transact(int code, Parcel data, Parcel reply, int flags)
        throws RemoteException {
    Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
    return transactNative(code, data, reply, flags);
}
```

```cpp
// android_util_Binder.cpp
static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
        jint code, jobject dataObj, jobject replyObj, jint flags)
{
    Parcel* data = parcelForJavaObject(env, dataObj);
    Parcel* reply = parcelForJavaObject(env, replyObj);

    IBinder* target = getBPNativeData(env, obj)->mObject.get();
    if (target == NULL) {
        jniThrowException(env, "java/lang/IllegalStateException", "Binder has been finalized!");
        return JNI_FALSE;
    }

    // target is a BpBinder, so this calls BpBinder::transact().
    status_t err = target->transact(code, *data, reply, flags);

    // ... handling of err is trimmed in this excerpt
    return JNI_FALSE;
}
```

The Java-layer BinderProxy.transact() is ultimately carried out by the Native-layer BpBinder::transact(). Its implementation was covered in the earlier Native-layer analysis.

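### Summary

The registration logic behind ServiceManager.addService is implemented in the Native layer: BpBinder(0) sends the ADD_SERVICE_TRANSACTION command, the data is exchanged through the Binder driver, and the service_manager process finally performs the registration.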
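To see what actually travels in the ADD_SERVICE_TRANSACTION request, the sketch below records the arguments in the same order ServiceManagerProxy.addService writes them. It is a toy model: a List<Object> stands in for the Parcel, FakeRemote stands in for mRemote, and the numeric value of ADD_SERVICE is chosen for illustration only.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of the addService marshalling order; no real Parcel involved. */
final class AddServiceSketch {
    static final int ADD_SERVICE = 3; // illustrative transaction code for this sketch

    /** Stand-in for the remote end (mRemote): records what it was sent. */
    static final class FakeRemote {
        int lastCode = -1;
        List<Object> lastData;

        boolean transact(int code, List<Object> data) {
            lastCode = code;
            lastData = new ArrayList<>(data);
            return true;
        }
    }

    /** Marshals the arguments in the order used by ServiceManagerProxy.addService. */
    static void addService(FakeRemote remote, String descriptor, String name,
            Object service, boolean allowIsolated, int dumpPriority) {
        List<Object> data = new ArrayList<>();
        data.add(descriptor);              // writeInterfaceToken
        data.add(name);                    // writeString
        data.add(service);                 // writeStrongBinder
        data.add(allowIsolated ? 1 : 0);   // writeInt
        data.add(dumpPriority);            // writeInt
        remote.transact(ADD_SERVICE, data);
    }

    public static void main(String[] args) {
        FakeRemote remote = new FakeRemote();
        addService(remote, "demo.IServiceManager", "demo", new Object(), false, 8);
        System.out.println("code=" + remote.lastCode + " fields=" + remote.lastData.size());
    }
}
```

The receiving side has to read the fields back in exactly this order, which is why the proxy and the service_manager implementation must agree on the layout.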

## Getting a service

### ServiceManager.getService

```java
// ServiceManager.java
public static IBinder getService(String name) {
    try {
        IBinder service = sCache.get(name);
        if (service != null) {
            return service;
        } else {
            return Binder.allowBlocking(rawGetService(name));
        }
    } catch (RemoteException e) {
        Log.e(TAG, "error in getService", e);
    }
    return null;
}

private static IBinder rawGetService(String name) throws RemoteException {
    final IBinder binder = getIServiceManager().getService(name);
    return binder;
}
```

A lookup first checks the local cache. Only if the service is not cached does it query for it through a Binder transaction. getIServiceManager returns a ServiceManagerProxy object.
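The cache-then-query order can be sketched in a few lines. In this hypothetical model a Function<String, Object> stands in for the Binder transaction made through ServiceManagerProxy, and GetServiceSketch, putInCache and remoteCalls are names invented for the example. As in the quoted getService code, a cache miss does not add the result to the cache.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical model of the lookup order in ServiceManager.getService. */
final class GetServiceSketch {
    private final Map<String, Object> cache = new HashMap<>();
    private final Function<String, Object> remoteLookup;
    int remoteCalls = 0; // counts how often the "transaction" path is taken

    GetServiceSketch(Function<String, Object> remoteLookup) {
        this.remoteLookup = remoteLookup;
    }

    /** Stand-in for whatever pre-populates sCache. */
    void putInCache(String name, Object binder) {
        cache.put(name, binder);
    }

    Object getService(String name) {
        Object cached = cache.get(name);
        if (cached != null) {
            return cached;                 // fast path: no IPC
        }
        remoteCalls++;
        return remoteLookup.apply(name);   // stands in for the Binder transaction
    }

    public static void main(String[] args) {
        GetServiceSketch sm = new GetServiceSketch(name -> "remote:" + name);
        System.out.println(sm.getService("activity"));
        sm.putInCache("activity", "cached:activity");
        System.out.println(sm.getService("activity"));
    }
}
```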

### ServiceManagerProxy.getService

```java
class ServiceManagerProxy implements IServiceManager {
    public ServiceManagerProxy(IBinder remote) {
        mRemote = remote;
    }

    public IBinder asBinder() {
        return mRemote;
    }

    public IBinder getService(String name) throws RemoteException {
        Parcel data = Parcel.obtain();
        Parcel reply = Parcel.obtain();
        data.writeInterfaceToken(IServiceManager.descriptor);
        data.writeString(name);
        mRemote.transact(GET_SERVICE_TRANSACTION, data, reply, 0);
        IBinder binder = reply.readStrongBinder();
        reply.recycle();
        data.recycle();
        return binder;
    }
}
```

### BinderProxy.transact

```java
// BinderProxy.java
public boolean transact(int code, Parcel data, Parcel reply, int flags)
        throws RemoteException {
    Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
    return transactNative(code, data, reply, flags);
}
```

```cpp
// android_util_Binder.cpp
static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
        jint code, jobject dataObj, jobject replyObj, jint flags)
{
    Parcel* data = parcelForJavaObject(env, dataObj);
    Parcel* reply = parcelForJavaObject(env, replyObj);

    IBinder* target = getBPNativeData(env, obj)->mObject.get();
    if (target == NULL) {
        jniThrowException(env, "java/lang/IllegalStateException", "Binder has been finalized!");
        return JNI_FALSE;
    }

    status_t err = target->transact(code, *data, reply, flags);

    // ... handling of err is trimmed in this excerpt
    return JNI_FALSE;
}
```

As with registration, the Java-layer BinderProxy.transact() is ultimately carried out by the Native-layer BpBinder::transact(). The Binder object is then read from reply.

### Parcel.readStrongBinder

```java
// Parcel.java
public final IBinder readStrongBinder() {
    return nativeReadStrongBinder(mNativePtr);
}
```

```cpp
// android_os_Parcel.cpp
static jobject android_os_Parcel_readStrongBinder(JNIEnv* env, jclass clazz, jlong nativePtr)
{
    Parcel* parcel = reinterpret_cast<Parcel*>(nativePtr);
    if (parcel != NULL) {
        return javaObjectForIBinder(env, parcel->readStrongBinder());
    }
    return NULL;
}
```

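readStrongBinder is essentially the reverse of writeStrongBinder. parcel->readStrongBinder() produces a BpBinder proxy object that refers to the Binder server side, and javaObjectForIBinder then converts that Native-layer BpBinder into a Java-layer BinderProxy. In other words, getService() ends up returning a BinderProxy that refers to the target Binder service.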
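javaObjectForIBinder goes through BinderProxy.getInstance, which looks the native IBinder address up in sProxyMap before creating a new proxy, so reading the same remote binder twice yields the same BinderProxy. A minimal sketch of that reuse follows; ProxyCacheSketch and Proxy are hypothetical names, and storing the proxies behind weak references in a HashMap is an assumption of this sketch rather than a description of the real sProxyMap.

```java
import java.lang.ref.WeakReference;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical model of a proxy cache keyed by native binder address. */
final class ProxyCacheSketch {

    /** Stand-in for BinderProxy: only remembers the native handle it wraps. */
    static final class Proxy {
        final long nativeHandle;

        Proxy(long nativeHandle) {
            this.nativeHandle = nativeHandle;
        }
    }

    private final Map<Long, WeakReference<Proxy>> proxies = new HashMap<>();

    /** Returns the live proxy for this handle, creating one only if needed. */
    synchronized Proxy getInstance(long nativeHandle) {
        WeakReference<Proxy> ref = proxies.get(nativeHandle);
        Proxy p = (ref != null) ? ref.get() : null;
        if (p == null) {
            p = new Proxy(nativeHandle);
            proxies.put(nativeHandle, new WeakReference<>(p));
        }
        return p;
    }

    public static void main(String[] args) {
        ProxyCacheSketch cache = new ProxyCacheSketch();
        Proxy a = cache.getInstance(7L);
        Proxy b = cache.getInstance(7L);
        System.out.println("reused: " + (a == b));
    }
}
```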

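### Summary

The lookup logic behind ServiceManager.getService is implemented in the Native layer: BpBinder(0) sends the GET_SERVICE_TRANSACTION command, the data is exchanged through the Binder driver, and the service_manager process finally performs the lookup. Once the service has been found, a BinderProxy object is created, and the BpBinder is recorded in the BinderProxyNativeData (its mObject member) that the BinderProxy's mNativeData field points to.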

## Death notification

### Framework: linkToDeath/unlinkToDeath

```java
// Typical usage
service.linkToDeath(new IBinder.DeathRecipient() {
    @Override
    public void binderDied() {
        Log.d(TAG, "died...");
    }
}, 0);

// Binder.java: a local Binder has nothing to watch, so both are no-ops.
public class Binder implements IBinder {
    public void linkToDeath(@NonNull DeathRecipient recipient, int flags) {
    }

    public boolean unlinkToDeath(@NonNull DeathRecipient recipient, int flags) {
        return true;
    }
}

// BinderProxy.java
final class BinderProxy implements IBinder {
    public native void linkToDeath(DeathRecipient recipient, int flags)
            throws RemoteException;
    public native boolean unlinkToDeath(DeathRecipient recipient, int flags);
}
```

BinderProxy.linkToDeath() and BinderProxy.unlinkToDeath() are native methods, implemented by android_os_BinderProxy_linkToDeath and android_os_BinderProxy_unlinkToDeath respectively.

### linkToDeath

#### Native: linkToDeath

##### android_os_BinderProxy_linkToDeath

```cpp
static void android_os_BinderProxy_linkToDeath(JNIEnv* env, jobject obj,
        jobject recipient, jint flags)
{
    if (recipient == NULL) {
        jniThrowNullPointerException(env, NULL);
        return;
    }

    BinderProxyNativeData *nd = getBPNativeData(env, obj);
    IBinder* target = nd->mObject.get();

    // Only remote binders (BpBinder) need a death recipient.
    if (!target->localBinder()) {
        DeathRecipientList* list = nd->mOrgue.get();
        sp<JavaDeathRecipient> jdr = new JavaDeathRecipient(env, recipient, list);
        status_t err = target->linkToDeath(jdr, NULL, flags);
        if (err != NO_ERROR) {
            // Failure adding the death recipient, so clear its reference now.
            jdr->clearReference();
            signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/);
        }
    }
}

class JavaDeathRecipient : public IBinder::DeathRecipient
{
public:
    JavaDeathRecipient(JNIEnv* env, jobject object, const sp<DeathRecipientList>& list)
        : mVM(jnienv_to_javavm(env)), mObject(env->NewGlobalRef(object)),
          mObjectWeak(NULL), mList(list)
    {
        // The list holds a strong reference to this object.
        list->add(this);

        gNumDeathRefsCreated.fetch_add(1, std::memory_order_relaxed);
        gcIfManyNewRefs(env);
    }

    void clearReference()
    {
        sp<DeathRecipientList> list = mList.promote();
        if (list != NULL) {
            list->remove(this);
        }
    }
    // ... other members trimmed
};
```

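android_os_BinderProxy_linkToDeath works as follows:

1. Get the DeathRecipientList, which records the JavaDeathRecipient entries of this BinderProxy. A single BpBinder can have several death callbacks registered.
2. Create a JavaDeathRecipient, which inherits from IBinder::DeathRecipient.
3. Add the death callback through BpBinder->linkToDeath.
4. If BpBinder->linkToDeath fails, remove the JavaDeathRecipient from the list again.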

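The JavaDeathRecipient constructor does two things:

- Through env->NewGlobalRef(object), it creates a global reference to recipient and stores it in the mObject member.
- It adds the current JavaDeathRecipient to the DeathRecipientList, which holds it through a strong pointer (sp).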
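The relationship between a Java callback, its native wrapper and the per-proxy list can be sketched as follows. This is a simplified model under stated assumptions: Wrapper stands in for JavaDeathRecipient, a plain java.util.List stands in for DeathRecipientList, and find mirrors the lookup by Java callback that unlinkToDeath performs later. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of JavaDeathRecipient and its owning list. */
final class DeathRecipientListSketch {

    interface Recipient {
        void binderDied();
    }

    /** Wraps the Java callback and registers itself with the list on construction. */
    static final class Wrapper {
        final Recipient target;
        private final List<Wrapper> list;

        Wrapper(Recipient target, List<Wrapper> list) {
            this.target = target;
            this.list = list;
            list.add(this);            // like list->add(this) in the constructor
        }

        /** Used on failure or unlink: drop this wrapper from the list. */
        void clearReference() {
            list.remove(this);
        }
    }

    /** Finds the wrapper that was created for a given Java callback, or null. */
    static Wrapper find(List<Wrapper> list, Recipient target) {
        for (Wrapper w : list) {
            if (w.target == target) {
                return w;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        List<Wrapper> list = new ArrayList<>();
        Recipient r = () -> System.out.println("died...");
        Wrapper w = new Wrapper(r, list);
        System.out.println("registered: " + (find(list, r) == w));
        w.clearReference();
        System.out.println("after clear: " + list.size());
    }
}
```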

##### BpBinder.linkToDeath

```cpp
status_t BpBinder::linkToDeath(
    const sp<DeathRecipient>& recipient, void* cookie, uint32_t flags)
{
    Obituary ob;
    ob.recipient = recipient;
    ob.cookie = cookie;
    ob.flags = flags;

    {
        AutoMutex _l(mLock);

        if (!mObitsSent) {
            if (!mObituaries) {
                // First recipient: ask the driver for a death notification.
                mObituaries = new Vector<Obituary>;
                if (!mObituaries) {
                    return NO_MEMORY;
                }
                getWeakRefs()->incWeak(this);
                IPCThreadState* self = IPCThreadState::self();
                self->requestDeathNotification(mHandle, this);
                self->flushCommands();
            }
            ssize_t res = mObituaries->add(ob);
            return res >= (ssize_t)NO_ERROR ? (status_t)NO_ERROR : res;
        }
    }

    return DEAD_OBJECT;
}

status_t IPCThreadState::requestDeathNotification(int32_t handle, BpBinder* proxy)
{
    mOut.writeInt32(BC_REQUEST_DEATH_NOTIFICATION);
    mOut.writeInt32((int32_t)handle);
    mOut.writePointer((uintptr_t)proxy);
    return NO_ERROR;
}

void IPCThreadState::flushCommands()
{
    if (mProcess->mDriverFD <= 0)
        return;
    talkWithDriver(false);
    // The first flush may itself have queued more commands, so flush again if needed.
    if (mOut.dataSize() > 0) {
        talkWithDriver(false);
    }
}
```

This sends the BC_REQUEST_DEATH_NOTIFICATION command to the binder driver in the kernel. Through ioctl, execution reaches binder_ioctl_write_read().

#### Binder Driver

```c
static int binder_thread_write(struct binder_proc *proc,
            struct binder_thread *thread,
            binder_uintptr_t binder_buffer, size_t size,
            binder_size_t *consumed)
{
    /* ... declarations of cmd, ptr and end trimmed ... */
    while (ptr < end && thread->return_error.cmd == BR_OK) {
        /* ... reading cmd from the user buffer trimmed ... */
        switch (cmd) {
        case BC_REQUEST_DEATH_NOTIFICATION:
        case BC_CLEAR_DEATH_NOTIFICATION: {
            uint32_t target;
            binder_uintptr_t cookie;
            struct binder_ref *ref;
            struct binder_ref_death *death = NULL;

            if (get_user(target, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            if (get_user(cookie, (binder_uintptr_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(binder_uintptr_t);
            if (cmd == BC_REQUEST_DEATH_NOTIFICATION) {
                /* Allocate memory for the death notification before taking the lock. */
                death = kzalloc(sizeof(*death), GFP_KERNEL);
                if (death == NULL) {
                    break;
                }
            }
            binder_proc_lock(proc);
            ref = binder_get_ref_olocked(proc, target, false);
            binder_node_lock(ref->node);
            if (cmd == BC_REQUEST_DEATH_NOTIFICATION) {
                if (ref->death) {
                    /* Already requested; the cleanup on this path is trimmed. */
                    break;
                }
                binder_stats_created(BINDER_STAT_DEATH);
                INIT_LIST_HEAD(&death->work.entry);
                death->cookie = cookie;
                ref->death = death;
                /* The hosting process is already dead: notify immediately. */
                if (ref->node->proc == NULL) {
                    ref->death->work.type = BINDER_WORK_DEAD_BINDER;
                    binder_inner_proc_lock(proc);
                    binder_enqueue_work_ilocked(&ref->death->work, &proc->todo);
                    binder_wakeup_proc_ilocked(proc);
                    binder_inner_proc_unlock(proc);
                }
            } else {
                /* BC_CLEAR_DEATH_NOTIFICATION handling trimmed */
            }
            binder_node_unlock(ref->node);
            binder_proc_unlock(proc);
        } break;
        }
    }
    return 0;
}
```

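While handling BC_REQUEST_DEATH_NOTIFICATION, if the process hosting the target Binder service is already dead, the driver adds a BINDER_WORK_DEAD_BINDER work item to the todo queue and delivers the death notification right away. This is the unusual case. More commonly, the process hosting the Binder service dies later, binder_release is called, and it leads to binder_node_release, which is where the death notification is issued.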

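### Triggering the death notification: binder_release

When the process hosting a Binder service dies, the resources belonging to that process are released. Releasing the binder fd invokes binder_release().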

```c
const struct file_operations binder_fops = {
    .owner = THIS_MODULE,
    .poll = binder_poll,
    .unlocked_ioctl = binder_ioctl,
    .compat_ioctl = binder_ioctl,
    .mmap = binder_mmap,
    .open = binder_open,
    .flush = binder_flush,
    .release = binder_release,
};

static int binder_release(struct inode *nodp, struct file *filp)
{
    struct binder_proc *proc = filp->private_data;

    debugfs_remove(proc->debugfs_entry);

    if (proc->binderfs_entry) {
        binderfs_remove_file(proc->binderfs_entry);
        proc->binderfs_entry = NULL;
    }

    binder_defer_work(proc, BINDER_DEFERRED_RELEASE);

    return 0;
}

static void binder_defer_work(struct binder_proc *proc, enum binder_deferred_state defer)
{
    mutex_lock(&binder_deferred_lock);
    proc->deferred_work |= defer;
    if (hlist_unhashed(&proc->deferred_work_node)) {
        hlist_add_head(&proc->deferred_work_node, &binder_deferred_list);
        schedule_work(&binder_deferred_work);
    }
    mutex_unlock(&binder_deferred_lock);
}

static DECLARE_WORK(binder_deferred_work, binder_deferred_func);

#define DECLARE_WORK(n, f) \
    struct work_struct n = __WORK_INITIALIZER(n, f)

#define __WORK_INITIALIZER(n, f) { \
    .data = WORK_DATA_STATIC_INIT(), \
    .entry = { &(n).entry, &(n).entry }, \
    .func = (f), \
    __WORK_INIT_LOCKDEP_MAP(#n, &(n)) \
    }

static void binder_deferred_func(struct work_struct *work)
{
    struct binder_proc *proc;
    struct files_struct *files;
    int defer;

    do {
        mutex_lock(&binder_deferred_lock);
        if (!hlist_empty(&binder_deferred_list)) {
            proc = hlist_entry(binder_deferred_list.first,
                    struct binder_proc, deferred_work_node);
            hlist_del_init(&proc->deferred_work_node);
            defer = proc->deferred_work;
            proc->deferred_work = 0;
        } else {
            proc = NULL;
            defer = 0;
        }
        mutex_unlock(&binder_deferred_lock);

        files = NULL;
        if (defer & BINDER_DEFERRED_PUT_FILES) {
            mutex_lock(&proc->files_lock);
            files = proc->files;
            if (files)
                proc->files = NULL;
            mutex_unlock(&proc->files_lock);
        }

        if (defer & BINDER_DEFERRED_FLUSH)
            binder_deferred_flush(proc);

        if (defer & BINDER_DEFERRED_RELEASE)
            binder_deferred_release(proc);

        if (files)
            put_files_struct(files);
    } while (proc);
}
```

So binder_release ultimately ends up in binder_deferred_release. Likewise, binder_flush ultimately ends up in binder_deferred_flush.

```c
static void binder_deferred_release(struct binder_proc *proc)
{
    struct binder_context *context = proc->context;
    struct binder_device *device;
    struct rb_node *n;
    int threads, nodes, incoming_refs, outgoing_refs, active_transactions;

    BUG_ON(proc->files);

    mutex_lock(&binder_procs_lock);
    hlist_del(&proc->proc_node);
    mutex_unlock(&binder_procs_lock);

    mutex_lock(&context->context_mgr_node_lock);
    if (context->binder_context_mgr_node &&
        context->binder_context_mgr_node->proc == proc) {
        context->binder_context_mgr_node = NULL;
    }
    mutex_unlock(&context->context_mgr_node_lock);

    device = container_of(proc->context, struct binder_device, context);
    if (refcount_dec_and_test(&device->ref)) {
        kfree(context->name);
        kfree(device);
    }
    proc->context = NULL;

    binder_inner_proc_lock(proc);
    proc->tmp_ref++;
    proc->is_dead = true;

    threads = 0;
    active_transactions = 0;
    while ((n = rb_first(&proc->threads))) {
        struct binder_thread *thread;

        thread = rb_entry(n, struct binder_thread, rb_node);
        binder_inner_proc_unlock(proc);
        threads++;
        active_transactions += binder_thread_release(proc, thread);
        binder_inner_proc_lock(proc);
    }

    nodes = 0;
    incoming_refs = 0;
    while ((n = rb_first(&proc->nodes))) {
        struct binder_node *node;

        node = rb_entry(n, struct binder_node, rb_node);
        nodes++;
        binder_inc_node_tmpref_ilocked(node);
        rb_erase(&node->rb_node, &proc->nodes);
        binder_inner_proc_unlock(proc);
        incoming_refs = binder_node_release(node, incoming_refs);
        binder_inner_proc_lock(proc);
    }
    binder_inner_proc_unlock(proc);

    outgoing_refs = 0;
    binder_proc_lock(proc);
    while ((n = rb_first(&proc->refs_by_desc))) {
        struct binder_ref *ref;

        ref = rb_entry(n, struct binder_ref, rb_node_desc);
        outgoing_refs++;
        binder_cleanup_ref_olocked(ref);
        binder_proc_unlock(proc);
        binder_free_ref(ref);
        binder_proc_lock(proc);
    }
    binder_proc_unlock(proc);

    binder_release_work(proc, &proc->todo);
    binder_release_work(proc, &proc->delivered_death);

    binder_proc_dec_tmpref(proc);
}
```

The part of interest here is binder_node_release:

```c
static int binder_node_release(struct binder_node *node, int refs)
{
    struct binder_ref *ref;
    int death = 0;
    struct binder_proc *proc = node->proc;

    binder_release_work(proc, &node->async_todo);

    binder_node_lock(node);
    binder_inner_proc_lock(proc);
    binder_dequeue_work_ilocked(&node->work);
    BUG_ON(!node->tmp_refs);
    if (hlist_empty(&node->refs) && node->tmp_refs == 1) {
        binder_inner_proc_unlock(proc);
        binder_node_unlock(node);
        binder_free_node(node);

        return refs;
    }

    node->proc = NULL;
    node->local_strong_refs = 0;
    node->local_weak_refs = 0;
    binder_inner_proc_unlock(proc);

    spin_lock(&binder_dead_nodes_lock);
    hlist_add_head(&node->dead_node, &binder_dead_nodes);
    spin_unlock(&binder_dead_nodes_lock);

    hlist_for_each_entry(ref, &node->refs, node_entry) {
        refs++;
        binder_inner_proc_lock(ref->proc);
        if (!ref->death) {
            binder_inner_proc_unlock(ref->proc);
            continue;
        }

        death++;

        ref->death->work.type = BINDER_WORK_DEAD_BINDER;
        binder_enqueue_work_ilocked(&ref->death->work, &ref->proc->todo);
        binder_wakeup_proc_ilocked(ref->proc);
        binder_inner_proc_unlock(ref->proc);
    }

    binder_node_unlock(node);
    binder_put_node(node);

    return refs;
}
```

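This function walks every binder_ref that points at the binder_node. For each ref that has a death notification registered, it adds a BINDER_WORK_DEAD_BINDER work item to the todo queue of the process that owns the ref and wakes up a waiting binder thread in that process.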
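The fan-out performed by binder_node_release can be modelled in user-space terms. The sketch below is purely illustrative: Proc, Ref and releaseNode are hypothetical stand-ins for binder_proc, binder_ref and the loop over node->refs, the work item is represented by a string, and locking and wake-ups are left out.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Hypothetical model of how a dying node notifies the processes that reference it. */
final class NodeReleaseSketch {
    static final String DEAD_BINDER = "BINDER_WORK_DEAD_BINDER";

    /** Stand-in for binder_proc: only the todo queue matters here. */
    static final class Proc {
        final Queue<String> todo = new ArrayDeque<>();
    }

    /** Stand-in for binder_ref: one client process's reference to the node. */
    static final class Ref {
        final Proc proc;
        final boolean deathRequested; // true if ref->death is set

        Ref(Proc proc, boolean deathRequested) {
            this.proc = proc;
            this.deathRequested = deathRequested;
        }
    }

    /** Visits every ref and returns how many were seen, like the refs counter. */
    static int releaseNode(List<Ref> refs) {
        int count = 0;
        for (Ref ref : refs) {
            count++;
            if (!ref.deathRequested) {
                continue;                       // this client never called linkToDeath
            }
            ref.proc.todo.add(DEAD_BINDER);     // queue the death work for that client
        }
        return count;
    }

    public static void main(String[] args) {
        Proc watcher = new Proc();
        Proc bystander = new Proc();
        List<Ref> refs = new ArrayList<>();
        refs.add(new Ref(watcher, true));
        refs.add(new Ref(bystander, false));
        System.out.println("refs seen: " + releaseNode(refs));
        System.out.println("watcher todo: " + watcher.todo);
        System.out.println("bystander todo: " + bystander.todo);
    }
}
```

Only clients that registered a death recipient receive the work item; processes that merely hold a reference are counted but not notified.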

### Handling the death notification

#### Binder Driver: binder_thread_read

```c
static int binder_thread_read(struct binder_proc *proc,
                  struct binder_thread *thread,
                  binder_uintptr_t binder_buffer, size_t size,
                  binder_size_t *consumed, int non_block)
{
    /* ... setup and dequeuing of the work item w trimmed ... */
    while (1) {
        switch (w->type) {
        case BINDER_WORK_DEAD_BINDER:
        case BINDER_WORK_DEAD_BINDER_AND_CLEAR:
        case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: {
            struct binder_ref_death *death;
            uint32_t cmd;
            binder_uintptr_t cookie;

            death = container_of(w, struct binder_ref_death, work);
            if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION)
                cmd = BR_CLEAR_DEATH_NOTIFICATION_DONE;
            else
                cmd = BR_DEAD_BINDER;
            cookie = death->cookie;

            if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION) {
                binder_inner_proc_unlock(proc);
                kfree(death);
                binder_stats_deleted(BINDER_STAT_DEATH);
            } else {
                binder_enqueue_work_ilocked(w, &proc->delivered_death);
                binder_inner_proc_unlock(proc);
            }
            /* Write the command and the cookie (the BpBinder pointer) to user space. */
            if (put_user(cmd, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            if (put_user(cookie, (binder_uintptr_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(binder_uintptr_t);
            binder_stat_br(proc, thread, cmd);
            if (cmd == BR_DEAD_BINDER)
                goto done;
        } break;
        }
    }
    /* ... the done label and the rest of the function trimmed ... */
}
```

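When a binder thread picks up a BINDER_WORK_DEAD_BINDER work item in binder_thread_read, the driver writes the BR_DEAD_BINDER command, followed by the cookie, to user space.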

#### IPCThreadState.executeCommand

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
{
    BBinder* obj;
    RefBase::weakref_type* refs;
    status_t result = NO_ERROR;

    switch ((uint32_t)cmd) {
    // ... other commands trimmed
    case BR_DEAD_BINDER:
        {
            // The cookie written by the driver is the BpBinder pointer.
            BpBinder *proxy = (BpBinder*)mIn.readPointer();
            proxy->sendObituary();
            mOut.writeInt32(BC_DEAD_BINDER_DONE);
            mOut.writePointer((uintptr_t)proxy);
        } break;

    default:
        printf("*** BAD COMMAND %d received from Binder driver\n", cmd);
        result = UNKNOWN_ERROR;
        break;
    }

    return result;
}

void BpBinder::sendObituary()
{
    mAlive = 0;
    if (mObitsSent) return;

    mLock.lock();
    Vector<Obituary>* obits = mObituaries;
    if (obits != NULL) {
        IPCThreadState* self = IPCThreadState::self();
        self->clearDeathNotification(mHandle, this);
        self->flushCommands();
        mObituaries = NULL;
    }
    mObitsSent = 1;
    mLock.unlock();

    // Invoke the callbacks outside the lock.
    if (obits != NULL) {
        const size_t N = obits->size();
        for (size_t i=0; i<N; i++) {
            reportOneDeath(obits->itemAt(i));
        }

        delete obits;
    }
}

void BpBinder::reportOneDeath(const Obituary& obit)
{
    sp<DeathRecipient> recipient = obit.recipient.promote();
    if (recipient == NULL) return;

    recipient->binderDied(this);
}
```

unlinkToDeath

Native: unlinkToDeath

android_os_BinderProxy_unlinkToDeath

```cpp
static jboolean android_os_BinderProxy_unlinkToDeath(JNIEnv* env, jobject obj,
                                                     jobject recipient, jint flags)
{
    jboolean res = JNI_FALSE;
    if (recipient == NULL) {
        jniThrowNullPointerException(env, NULL);
        return res;
    }

    BinderProxyNativeData* nd = getBPNativeData(env, obj);
    IBinder* target = nd->mObject.get();
    if (target == NULL) {
        return JNI_FALSE;
    }

    // Only a remote (proxy) binder can have death recipients.
    if (!target->localBinder()) {
        status_t err = NAME_NOT_FOUND;

        // Look up the native recipient that wraps the Java recipient.
        DeathRecipientList* list = nd->mOrgue.get();
        sp<JavaDeathRecipient> origJDR = list->find(recipient);
        if (origJDR != NULL) {
            wp<IBinder::DeathRecipient> dr;
            err = target->unlinkToDeath(origJDR, NULL, flags, &dr);
            if (err == NO_ERROR && dr != NULL) {
                sp<IBinder::DeathRecipient> sdr = dr.promote();
                JavaDeathRecipient* jdr = static_cast<JavaDeathRecipient*>(sdr.get());
                if (jdr != NULL) {
                    jdr->clearReference();
                }
            }
        }
        // ... (the handling of err, which determines res, is omitted in this excerpt)
    }

    return res;
}
```

BpBinder.unlinkToDeath

```cpp
status_t BpBinder::unlinkToDeath(
    const wp<DeathRecipient>& recipient, void* cookie, uint32_t flags,
    wp<DeathRecipient>* outRecipient)
{
    AutoMutex _l(mLock);

    if (mObitsSent) {
        return DEAD_OBJECT;
    }

    const size_t N = mObituaries ? mObituaries->size() : 0;
    for (size_t i=0; i<N; i++) {
        const Obituary& obit = mObituaries->itemAt(i);
        if ((obit.recipient == recipient
                    || (recipient == NULL && obit.cookie == cookie))
                && obit.flags == flags) {
            if (outRecipient != NULL) {
                *outRecipient = mObituaries->itemAt(i).recipient;
            }
            mObituaries->removeAt(i);
            // Only when the last recipient is removed is the driver told to clear.
            if (mObituaries->size() == 0) {
                IPCThreadState* self = IPCThreadState::self();
                self->clearDeathNotification(mHandle, this);
                self->flushCommands();
                delete mObituaries;
                mObituaries = NULL;
            }
            return NO_ERROR;
        }
    }

    return NAME_NOT_FOUND;
}

status_t IPCThreadState::clearDeathNotification(int32_t handle, BpBinder* proxy)
{
    mOut.writeInt32(BC_CLEAR_DEATH_NOTIFICATION);
    mOut.writeInt32((int32_t)handle);
    mOut.writePointer((uintptr_t)proxy);
    return NO_ERROR;
}
```

The BC_CLEAR_DEATH_NOTIFICATION command is written to mOut; once flushCommands() runs, it is handed to the kernel.

Binder Driver

```c
static int binder_thread_write(struct binder_proc *proc,
                               struct binder_thread *thread,
                               binder_uintptr_t binder_buffer, size_t size,
                               binder_size_t *consumed)
{
    // ... (setup of ptr and end omitted)
    while (ptr < end && thread->return_error.cmd == BR_OK) {
        // ... (reading cmd from the buffer omitted)
        switch (cmd) {
        case BC_REQUEST_DEATH_NOTIFICATION:
        case BC_CLEAR_DEATH_NOTIFICATION: {
            uint32_t target;
            binder_uintptr_t cookie;
            struct binder_ref *ref;
            struct binder_ref_death *death = NULL;

            if (get_user(target, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            if (get_user(cookie, (binder_uintptr_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(binder_uintptr_t);

            binder_proc_lock(proc);
            ref = binder_get_ref_olocked(proc, target, false);
            binder_node_lock(ref->node);
            if (cmd == BC_REQUEST_DEATH_NOTIFICATION) {
                // ... (registration path omitted)
            } else {
                if (ref->death == NULL) {
                    binder_node_unlock(ref->node);
                    binder_proc_unlock(proc);
                    break;
                }
                death = ref->death;
                if (death->cookie != cookie) {
                    binder_node_unlock(ref->node);
                    binder_proc_unlock(proc);
                    break;
                }
                ref->death = NULL;
                binder_inner_proc_lock(proc);
                if (list_empty(&death->work.entry)) {
                    // No death work is pending: queue a "clear" work item.
                    death->work.type = BINDER_WORK_CLEAR_DEATH_NOTIFICATION;
                    if (thread->looper &
                        (BINDER_LOOPER_STATE_REGISTERED |
                         BINDER_LOOPER_STATE_ENTERED))
                        binder_enqueue_thread_work_ilocked(thread, &death->work);
                    else {
                        binder_enqueue_work_ilocked(&death->work, &proc->todo);
                        binder_wakeup_proc_ilocked(proc);
                    }
                } else {
                    // A death is already queued: upgrade it instead of queueing again.
                    BUG_ON(death->work.type != BINDER_WORK_DEAD_BINDER);
                    death->work.type = BINDER_WORK_DEAD_BINDER_AND_CLEAR;
                }
                binder_inner_proc_unlock(proc);
            }
            binder_node_unlock(ref->node);
            binder_proc_unlock(proc);
        } break;
        }
    }
    // ... (return omitted)
}

static int binder_thread_read(struct binder_proc *proc,
                              struct binder_thread *thread,
                              binder_uintptr_t binder_buffer, size_t size,
                              binder_size_t *consumed, int non_block)
{
    // ... (setup of ptr and retrieval of the work item w omitted)
    while (1) {
        // ...
        switch (w->type) {
        case BINDER_WORK_DEAD_BINDER:
        case BINDER_WORK_DEAD_BINDER_AND_CLEAR:
        case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: {
            struct binder_ref_death *death;
            uint32_t cmd;
            binder_uintptr_t cookie;

            death = container_of(w, struct binder_ref_death, work);
            if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION)
                cmd = BR_CLEAR_DEATH_NOTIFICATION_DONE;
            else
                cmd = BR_DEAD_BINDER;
            cookie = death->cookie;
            if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION) {
                binder_inner_proc_unlock(proc);
                kfree(death);
                binder_stats_deleted(BINDER_STAT_DEATH);
            } else {
                binder_enqueue_work_ilocked(w, &proc->delivered_death);
                binder_inner_proc_unlock(proc);
            }
            if (put_user(cmd, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            if (put_user(cookie, (binder_uintptr_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(binder_uintptr_t);
            binder_stat_br(proc, thread, cmd);
            if (cmd == BR_DEAD_BINDER)
                goto done;
        } break;
        }
    }
    // ... (done label and return omitted)
}
```
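The work-item handling in the two driver functions above can be condensed into a small decision table. The sketch below is a simplified model, assuming only what the excerpts show; it ignores locking and queues, and the names `WorkType`, `ReturnCmd`, `onClearRequest`, `commandFor` and `staysPendingUntilAck` are hypothetical.

```cpp
// Work item types handled by the death-notification paths in the excerpts.
enum class WorkType { DeadBinder, DeadBinderAndClear, ClearDeathNotification };

// Commands that the read path writes back to user space.
enum class ReturnCmd { DeadBinder, ClearDeathNotificationDone };

// Write path for BC_CLEAR_DEATH_NOTIFICATION.
// alreadyQueued models !list_empty(&death->work.entry): a BINDER_WORK_DEAD_BINDER
// item is pending, so it is upgraded instead of queueing a second item.
WorkType onClearRequest(bool alreadyQueued) {
    return alreadyQueued ? WorkType::DeadBinderAndClear
                         : WorkType::ClearDeathNotification;
}

// Read path: only a pure "clear" work item produces the DONE reply;
// both death variants are reported as BR_DEAD_BINDER.
ReturnCmd commandFor(WorkType type) {
    return type == WorkType::ClearDeathNotification
               ? ReturnCmd::ClearDeathNotificationDone
               : ReturnCmd::DeadBinder;
}

// Read path: a death item moves to proc->delivered_death and waits for the
// acknowledgement, while a clear item is freed immediately.
bool staysPendingUntilAck(WorkType type) {
    return type != WorkType::ClearDeathNotification;
}
```

One consequence visible in this model: if the remote side has already died by the time the clear request arrives, user space still receives BR_DEAD_BINDER rather than BR_CLEAR_DEATH_NOTIFICATION_DONE for that work item.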

IPCThreadState.executeCommand

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
{
    BBinder* obj;
    RefBase::weakref_type* refs;
    status_t result = NO_ERROR;

    switch ((uint32_t)cmd) {
    // ... (other commands omitted)
    case BR_CLEAR_DEATH_NOTIFICATION_DONE: {
        BpBinder *proxy = (BpBinder*)mIn.readPointer();
        // Drop a weak reference on the proxy now that the driver is done with it.
        proxy->getWeakRefs()->decWeak(proxy);
    } break;

    default:
        printf("*** BAD COMMAND %d received from Binder driver\n", cmd);
        result = UNKNOWN_ERROR;
        break;
    }
    return result;
}
```

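Summary

Every process that uses Binder IPC opens the /dev/binder file. When such a process exits abnormally, the Binder driver invokes the release callback registered for /dev/binder, performs cleanup, and checks whether death notifications have been registered for the process's BBinder objects. If any exist, it sends a death notification to the corresponding BpBinder side. A DeathRecipient is only meaningful on the Bp side, because its purpose is to observe the death of the Bn side. If a Bn registered a death notification for itself, its own process would already be gone when the notification was due, so nothing could be delivered.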

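The linkToDeath flow:

requestDeathNotification passes the BC_REQUEST_DEATH_NOTIFICATION command to the driver, with mHandle and the BpBinder object as arguments;

in binder_thread_write(), a single BpBinder may register several death callbacks, but the kernel allows the death notification itself to be registered only once;

registering a death callback essentially means attaching a binder_ref_death pointer to the binder_ref structure, with the binder_ref_death cookie recording the BpBinder pointer.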

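Triggering the death callback:

In the process hosting the service entity: binder_release calls binder_node_release(), which iterates over every ref of that binder_node and checks ref->death. Where one exists, a BINDER_WORK_DEAD_BINDER work item is added to ref->proc->todo (the todo queue of the process that holds the ref);

in the process holding the reference: binder_thread_read() writes BR_DEAD_BINDER to user space, which triggers the death callback;

the death notification is delivered by sendObituary.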
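The delivery step can be modeled in plain standard C++. The sketch below is a simplified stand-in for BpBinder::sendObituary, assuming std::weak_ptr in place of wp<> and omitting the lock and the driver commands; `Recipient` and `ProxyModel` are hypothetical names, and rejecting a link after the obituary has been sent is a modeling choice.

```cpp
#include <memory>
#include <vector>

// Hypothetical stand-in for IBinder::DeathRecipient.
struct Recipient {
    int deaths = 0;
    void binderDied() { ++deaths; }
};

// Simplified model of the proxy-side obituary list.
class ProxyModel {
public:
    // Returns false once the obituary has been sent (modeling choice).
    bool link(const std::shared_ptr<Recipient>& r) {
        if (obitsSent_) return false;
        obits_.push_back(r);
        return true;
    }

    // Mirrors the shape of sendObituary(): mark the proxy dead, detach the
    // list, then notify every recipient that is still alive, exactly once.
    void sendObituary() {
        alive_ = false;
        if (obitsSent_) return;
        std::vector<std::weak_ptr<Recipient>> obits;
        obits.swap(obits_);
        obitsSent_ = true;
        for (const auto& weak : obits) {
            // Like promote(): skip recipients that were already destroyed.
            if (auto strong = weak.lock()) strong->binderDied();
        }
    }

    bool alive() const { return alive_; }

private:
    bool alive_ = true;
    bool obitsSent_ = false;
    std::vector<std::weak_ptr<Recipient>> obits_;
};
```

Holding the recipients weakly means the proxy never keeps a callback object alive on its own, and the obitsSent_ flag makes a second BR_DEAD_BINDER for the same proxy harmless.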

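The unlinkToDeath flow:

unlinkToDeath asks the driver to clear the death notification only once all entries in the BpBinder's mObituaries have been removed; otherwise it merely removes one recipient at the Native layer;

clearDeathNotification passes BC_CLEAR_DEATH_NOTIFICATION to the driver, with mHandle and the BpBinder object as arguments;

binder_thread_write() sets ref->death to NULL and adds a BINDER_WORK_CLEAR_DEATH_NOTIFICATION work item to the todo queue of the current thread or process (if a BINDER_WORK_DEAD_BINDER item is already queued, it is upgraded to BINDER_WORK_DEAD_BINDER_AND_CLEAR instead);

binder_thread_read() writes BR_CLEAR_DEATH_NOTIFICATION_DONE to user space.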
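The bookkeeping behind "many callbacks, one kernel registration" can be sketched as a small model. It assumes that the first link issues the request and the last unlink issues the clear; recipients are represented by integer ids, driver commands are recorded as strings instead of being sent, and `DeathLinkModel` is a hypothetical name.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Simplified model of one proxy's recipient list and the driver commands it causes.
class DeathLinkModel {
public:
    // Only the first recipient triggers a registration with the driver.
    void linkToDeath(int recipientId) {
        if (recipients_.empty()) {
            commands_.push_back("BC_REQUEST_DEATH_NOTIFICATION");
        }
        recipients_.push_back(recipientId);
    }

    // Returns false when the recipient is unknown (NAME_NOT_FOUND in the excerpt).
    // Only removing the last recipient triggers a clear request to the driver.
    bool unlinkToDeath(int recipientId) {
        auto it = std::find(recipients_.begin(), recipients_.end(), recipientId);
        if (it == recipients_.end()) return false;
        recipients_.erase(it);
        if (recipients_.empty()) {
            commands_.push_back("BC_CLEAR_DEATH_NOTIFICATION");
        }
        return true;
    }

    // Commands that would have been written to the driver, in order.
    const std::vector<std::string>& commands() const { return commands_; }

private:
    std::vector<int> recipients_;
    std::vector<std::string> commands_;
};
```

Linking two recipients and then unlinking both yields exactly two recorded commands, one request and one clear, no matter how many recipients were attached in between.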

clearCallingIdentity/restoreCallingIdentity

The relevant source code is as follows:

```java
public static final native long clearCallingIdentity();
public static final native void restoreCallingIdentity(long token);
```

```cpp
static jlong android_os_Binder_clearCallingIdentity(JNIEnv* env, jobject clazz)
{
    return IPCThreadState::self()->clearCallingIdentity();
}

static void android_os_Binder_restoreCallingIdentity(JNIEnv* env, jobject clazz, jlong token)
{
    // Sanity check: reject tokens whose UID half falls in a range that is not used.
    int uid = (int)(token>>32);
    if (uid > 0 && uid < 999) {
        char buf[128];
        sprintf(buf, "Restoring bad calling ident: 0x%" PRIx64, token);
        jniThrowException(env, "java/lang/IllegalStateException", buf);
        return;
    }
    IPCThreadState::self()->restoreCallingIdentity(token);
}

int64_t IPCThreadState::clearCallingIdentity()
{
    // UID in the high 32 bits, PID in the low 32 bits.
    int64_t token = ((int64_t)mCallingUid<<32) | mCallingPid;
    clearCaller();
    return token;
}

void IPCThreadState::clearCaller()
{
    mCallingPid = getpid();
    mCallingUid = getuid();
}

void IPCThreadState::restoreCallingIdentity(int64_t token)
{
    mCallingUid = (int)(token>>32);
    mCallingPid = (int)token;
}
```

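These two methods involve two fields:

mCallingUid (referred to as UID) stores the Uid of the calling process in the Binder IPC call

mCallingPid (referred to as PID) stores the Pid of the calling process in the Binder IPC call

From the source above:

clearCallingIdentity clears the remote caller's UID and PID, replaces them with the UID and PID of the current local process, and returns the old values packed into a token

restoreCallingIdentity restores the remote caller's UID and PID from that token, that is, it is the inverse of clearCallingIdentity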
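The token is nothing more than the two values packed into one 64-bit integer, UID in the high half and PID in the low half. The following sketch isolates that arithmetic; the function names are hypothetical, and it goes through unsigned types to avoid sign-extension surprises, which is a choice of this sketch rather than what the excerpt does.

```cpp
#include <cstdint>

// Pack the UID into the high 32 bits and the PID into the low 32 bits.
int64_t packIdentity(int32_t uid, int32_t pid) {
    const uint64_t high = static_cast<uint64_t>(static_cast<uint32_t>(uid)) << 32;
    const uint64_t low = static_cast<uint32_t>(pid);
    return static_cast<int64_t>(high | low);
}

// Recover the UID from the high half of the token.
int32_t uidOf(int64_t token) {
    return static_cast<int32_t>(static_cast<uint64_t>(token) >> 32);
}

// Recover the PID from the low half of the token.
int32_t pidOf(int64_t token) {
    return static_cast<int32_t>(static_cast<uint64_t>(token) & 0xFFFFFFFFu);
}

// Mirrors the sanity check in the JNI restore function: a token whose UID half
// lies strictly between 0 and 999 is treated as bogus.
bool failsJniSanityCheck(int64_t token) {
    const int32_t uid = uidOf(token);
    return uid > 0 && uid < 999;
}
```

For example, a caller with UID 10086 and PID 4321 yields a token whose high half is 10086 and whose low half is 4321, and both values come back unchanged from uidOf and pidOf.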

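Scenario and purpose: process A calls a service in process B through Binder, and while handling that call, process B calls another service in its own process through Binder.

Process A calls a service in process B through Binder: mCallingUid and mCallingPid in process B's IPCThreadState hold the UID and PID of process A. Process B can then call Binder.getCallingPid() and Binder.getCallingUid() to obtain process A's UID and PID and use them for permission checks, deciding whether process A is allowed to call a given method of process B.

Process B calls another service in its own process through Binder: process B is now the caller of that other service, so mCallingUid and mCallingPid should hold process B's own UID and PID, which is what clearCallingIdentity() achieves. Once process B has finished calling that component, it is still acting as the server for process A's call, so mCallingUid and mCallingPid must be set back to process A's UID and PID by calling restoreCallingIdentity().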
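The clear-then-restore pairing in this scenario lends itself to a scoped guard, so that the restore cannot be forgotten on an early return. The sketch below is a self-contained model, not the libbinder API: `ThreadStateModel` and `ScopedClearIdentity` are hypothetical names, and the process's own UID and PID are stored as plain fields instead of being read with getuid() and getpid().

```cpp
#include <cstdint>

// Simplified model of the per-thread caller identity.
struct ThreadStateModel {
    int32_t callingUid;
    int32_t callingPid;
    int32_t selfUid;  // stands in for getuid()
    int32_t selfPid;  // stands in for getpid()

    // Save the caller into a token, then make the local process the caller.
    int64_t clearCallingIdentity() {
        const uint64_t high = static_cast<uint64_t>(static_cast<uint32_t>(callingUid)) << 32;
        const int64_t token = static_cast<int64_t>(high | static_cast<uint32_t>(callingPid));
        callingUid = selfUid;
        callingPid = selfPid;
        return token;
    }

    // Put the saved caller back.
    void restoreCallingIdentity(int64_t token) {
        callingUid = static_cast<int32_t>(static_cast<uint64_t>(token) >> 32);
        callingPid = static_cast<int32_t>(static_cast<uint64_t>(token) & 0xFFFFFFFFu);
    }
};

// Clears the identity on construction and restores it on destruction.
class ScopedClearIdentity {
public:
    explicit ScopedClearIdentity(ThreadStateModel& state)
        : state_(state), token_(state.clearCallingIdentity()) {}
    ~ScopedClearIdentity() { state_.restoreCallingIdentity(token_); }

    ScopedClearIdentity(const ScopedClearIdentity&) = delete;
    ScopedClearIdentity& operator=(const ScopedClearIdentity&) = delete;

private:
    ThreadStateModel& state_;
    int64_t token_;
};
```

Inside the guard's scope the model reports process B's own identity; once the scope ends it reports process A's again. In Java code the same pairing is usually written with try/finally around the call made under the cleared identity.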

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
{
    switch (cmd) {
    ...
    case BR_TRANSACTION: {
        binder_transaction_data tr;
        result = mIn.read(&tr, sizeof(tr));

        // Remember the current identity before taking on the caller's.
        const pid_t origPid = mCallingPid;
        const uid_t origUid = mCallingUid;

        mCallingPid = tr.sender_pid;
        mCallingUid = tr.sender_euid;

        if (tr.target.ptr) {
            sp<BBinder> b((BBinder*)tr.cookie);
            const status_t error = b->transact(tr.code, buffer, &reply, tr.flags);
        }

        // Restore the previous identity once the transaction has been handled.
        mCallingPid = origPid;
        mCallingUid = origUid;
    }
    }
}
```

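Conclusion

At the Framework layer, Binder uses JNI to call into the Native-layer Binder architecture and thereby provides services to applications above it. At the Native layer, Binder follows a client/server architecture with a Bn side (server) and a Bp side (client); the Java layer is similar in both naming and structure, as a diagram borrowed from the web illustrates:

The Framework-layer ServiceManager class is ultimately implemented by passing calls through BinderProxy to the Native layer.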