## Overview

Note: this article is based on the Android 9.0 source code. For brevity, the quoted source may be abridged in places.

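ServiceManager is the daemon of the Binder IPC mechanism and is itself a Binder service. It does not use the multithreaded model in libbinder to talk to the Binder driver. Instead it ships its own binder.c that talks to the driver directly, with a single loop, binder_loop, that reads and handles transactions. The benefit is simplicity and efficiency.

ServiceManager's own job is relatively simple: looking up and registering services. In Binder IPC, most of the traffic is actually between a BpBinder and a BBinder, for example between ActivityManagerProxy and ActivityManagerService.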

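### ioctl

ioctl is the function through which a device driver manages a device's I/O channel. It controls device properties such as a serial port's baud rate or a motor's speed. Its arguments include a file descriptor fd, which the user program gets back from open when it opens the device, and a cmd argument carrying the control command. Any further arguments depend on cmd.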
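To make the fd/cmd/argument shape concrete, here is a minimal sketch that uses the standard FIONREAD request on a pipe instead of a Binder command. The helper names bytes_available and pending_after_write are made up for illustration, and the example assumes a Linux-like system.

```cpp
#include <cassert>
#include <sys/ioctl.h>  // ioctl, FIONREAD
#include <unistd.h>     // pipe, write, close

// Hypothetical helper: ask the kernel how many bytes are waiting on fd.
// Returns -1 if the ioctl call fails.
int bytes_available(int fd) {
    int pending = 0;
    // fd selects the device, FIONREAD is the command, and &pending is the
    // command-specific argument that the kernel fills in.
    if (ioctl(fd, FIONREAD, &pending) == -1) {
        return -1;
    }
    return pending;
}

// Hypothetical demo: write n bytes into a fresh pipe, then query the read end.
int pending_after_write(int n) {
    assert(n >= 0 && n <= 64);
    int fds[2];
    if (pipe(fds) == -1) return -1;
    char buf[64] = {0};
    ssize_t written = write(fds[1], buf, static_cast<size_t>(n));
    int pending = (written == n) ? bytes_available(fds[0]) : -1;
    close(fds[0]);
    close(fds[1]);
    return pending;
}
```

Binder uses the same call shape, only with its own commands such as BINDER_VERSION and BINDER_WRITE_READ and with a struct as the third argument.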

## Starting ServiceManager

### Overview

ServiceManager is created by the init process while it parses init.rc. Its executable is /system/bin/servicemanager, its source file is service_manager.c, and its process name is /system/bin/servicemanager.

### service_manager.main

Source file: frameworks/native/cmds/servicemanager/service_manager.c

```c
int main(int argc, char** argv)
{
    struct binder_state *bs;
    union selinux_callback cb;
    char *driver;

    if (argc > 1) {
        driver = argv[1];
    } else {
        driver = "/dev/binder";
    }

    // Open the Binder driver and request a 128 KB mapping.
    bs = binder_open(driver, 128*1024);
    if (!bs) {
        return -1;
    }

    // Register this process as the context manager.
    if (binder_become_context_manager(bs)) {
        ALOGE("cannot become context manager (%s)\n", strerror(errno));
        return -1;
    }

    /* ... SELinux setup omitted in this excerpt ... */

    // Enter the loop and handle client requests.
    binder_loop(bs, svcmgr_handler);

    return 0;
}
```

### binder_open

Source file: frameworks/native/cmds/servicemanager/binder.c

```c
struct binder_state
{
    int fd;          // file descriptor of the Binder device
    void *mapped;    // start address of the mmap'd region
    size_t mapsize;  // size of the mapped region
};

struct binder_state *binder_open(const char* driver, size_t mapsize)
{
    struct binder_state *bs;
    struct binder_version vers;

    bs = malloc(sizeof(*bs));
    if (!bs) {
        errno = ENOMEM;
        return NULL;
    }

    // Open the Binder device via the open() system call.
    bs->fd = open(driver, O_RDWR | O_CLOEXEC);
    if (bs->fd < 0) {
        fprintf(stderr, "binder: cannot open %s (%s)\n",
                driver, strerror(errno));
        goto fail_open;
    }

    // Check that the kernel and user-space protocol versions match.
    if ((ioctl(bs->fd, BINDER_VERSION, &vers) == -1) ||
        (vers.protocol_version != BINDER_CURRENT_PROTOCOL_VERSION)) {
        fprintf(stderr,
                "binder: kernel driver version (%d) differs from user space version (%d)\n",
                vers.protocol_version, BINDER_CURRENT_PROTOCOL_VERSION);
        goto fail_open;
    }

    // Map mapsize bytes of the device into this process.
    bs->mapsize = mapsize;
    bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
    if (bs->mapped == MAP_FAILED) {
        fprintf(stderr, "binder: cannot map device (%s)\n",
                strerror(errno));
        goto fail_map;
    }

    return bs;

fail_map:
    close(bs->fd);
fail_open:
    free(bs);
    return NULL;
}
```

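Opening the Binder driver involves the following steps:

First, open() is called on the Binder device. The call goes through a system call into the Binder driver and reaches the driver's binder_open(), which creates a binder_proc object in the driver, stores it in the private_data field of the opened file (filp->private_data), and adds it to the global list binder_procs.

Next, ioctl() checks that the user-space Binder protocol version matches the driver's version.

Finally, mmap() sets up the memory mapping. Like open(), it goes through a system call and ends up in the driver's binder_mmap().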

### binder_become_context_manager

```c
int binder_become_context_manager(struct binder_state *bs)
{
    // Ask the driver to make this process the context manager.
    return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);
}
```

### binder_loop

```c
void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    // Tell the driver that this thread is entering the loop.
    readbuf[0] = BC_ENTER_LOOPER;
    binder_write(bs, readbuf, sizeof(uint32_t));

    for (;;) {
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        // Read the next batch of commands from the driver.
        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
        if (res < 0) {
            ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));
            break;
        }

        // Parse what the driver returned.
        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        if (res == 0) {
            ALOGE("binder_loop: unexpected reply?!\n");
            break;
        }
        if (res < 0) {
            ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));
            break;
        }
    }
}

int binder_write(struct binder_state *bs, void *data, size_t len)
{
    struct binder_write_read bwr;
    int res;

    bwr.write_size = len;
    bwr.write_consumed = 0;
    bwr.write_buffer = (uintptr_t) data;
    bwr.read_size = 0;
    bwr.read_consumed = 0;
    bwr.read_buffer = 0;
    res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
    if (res < 0) {
        fprintf(stderr, "binder_write: ioctl failed (%s)\n",
                strerror(errno));
    }
    return res;
}
```

binder_write() fills in only write_buffer, so the ioctl enters the driver's binder_thread_write() method.

```c
static int binder_thread_write(struct binder_proc *proc,
            struct binder_thread *thread,
            binder_uintptr_t binder_buffer, size_t size,
            binder_size_t *consumed)
{
    /* ... */
        case BC_ENTER_LOOPER:
            /* ... */
            // Mark this thread's looper state as entered.
            thread->looper |= BINDER_LOOPER_STATE_ENTERED;
            break;
    /* ... */
}
```

### binder_parse

Next comes the for loop, which calls ioctl(). This time only read_buffer in bwr is set, so the driver enters binder_thread_read(). Once data has been read, binder_parse() is called to parse it.

```c
int binder_parse(struct binder_state *bs, struct binder_io *bio,
                 uintptr_t ptr, size_t size, binder_handler func)
{
    int r = 1;
    uintptr_t end = ptr + (uintptr_t) size;

    while (ptr < end) {
        uint32_t cmd = *(uint32_t *) ptr;
        ptr += sizeof(uint32_t);
        switch (cmd) {
        case BR_NOOP:
            break;
        case BR_TRANSACTION_COMPLETE:
            break;
        case BR_INCREFS:
        case BR_ACQUIRE:
        case BR_RELEASE:
        case BR_DECREFS:
            ptr += sizeof(struct binder_ptr_cookie);
            break;
        case BR_TRANSACTION: {
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            binder_dump_txn(txn);
            if (func) {
                unsigned rdata[256/4];
                struct binder_io msg;
                struct binder_io reply;
                int res;

                bio_init(&reply, rdata, sizeof(rdata), 4);
                // Parse the transaction data into msg.
                bio_init_from_txn(&msg, txn);
                // Dispatch to the handler (svcmgr_handler here).
                res = func(bs, txn, &msg, &reply);
                if (txn->flags & TF_ONE_WAY) {
                    binder_free_buffer(bs, txn->data.ptr.buffer);
                } else {
                    binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);
                }
            }
            ptr += sizeof(*txn);
            break;
        }
        case BR_REPLY:
            /* ... reply handling omitted in this excerpt ... */
            break;
        case BR_DEAD_BINDER: {
            struct binder_death *death = (struct binder_death *)(uintptr_t) *(binder_uintptr_t *)ptr;
            ptr += sizeof(binder_uintptr_t);
            // Invoke the registered death callback.
            death->func(bs, death->ptr);
            break;
        }
        case BR_FAILED_REPLY:
            r = -1;
            break;
        case BR_DEAD_REPLY:
            r = -1;
            break;
        default:
            ALOGE("parse: OOPS %d\n", cmd);
            return -1;
        }
    }

    return r;
}
```
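The parsing loop above walks a buffer of 32-bit command codes, each optionally followed by a fixed-size payload. The following is a minimal sketch of that pattern only. The command codes CMD_NOOP, CMD_REF and CMD_TXN and their payload sizes are made up for illustration and are not the real BR_* values.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command codes; the real driver uses BR_* constants.
enum : uint32_t { CMD_NOOP = 1, CMD_REF = 2, CMD_TXN = 3 };

struct ParseResult {
    int transactions = 0;  // number of CMD_TXN records seen
    bool ok = true;        // false on unknown command or truncated payload
};

// Walk a buffer of [cmd][payload] records, in the style of binder_parse().
ParseResult parse_commands(const std::vector<uint8_t>& buf) {
    ParseResult result;
    size_t pos = 0;
    while (pos + sizeof(uint32_t) <= buf.size()) {
        uint32_t cmd;
        std::memcpy(&cmd, buf.data() + pos, sizeof(cmd));
        pos += sizeof(cmd);

        size_t payload = 0;
        switch (cmd) {
        case CMD_NOOP: payload = 0; break;
        case CMD_REF:  payload = 16; break;  // assumed payload size
        case CMD_TXN:  payload = 8; result.transactions++; break;  // assumed size
        default:       result.ok = false; return result;
        }
        if (pos + payload > buf.size()) {  // record is truncated
            result.ok = false;
            return result;
        }
        pos += payload;  // skip the payload, as binder_parse advances ptr
    }
    return result;
}

// Hypothetical helper for building a buffer of records.
void append_command(std::vector<uint8_t>& buf, uint32_t cmd, size_t payload_bytes) {
    assert(payload_bytes <= 1024);
    const uint8_t* p = reinterpret_cast<const uint8_t*>(&cmd);
    buf.insert(buf.end(), p, p + sizeof(cmd));
    buf.insert(buf.end(), payload_bytes, uint8_t{0});
}
```

The key design point mirrored here is that the parser must know each command's payload size, because the stream carries no length prefix.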

### svcmgr_handler

This method looks up services, registers services, and lists all services.

```c
struct svcinfo
{
    struct svcinfo *next;
    uint32_t handle;            // handle of the service
    struct binder_death death;
    int allow_isolated;
    uint32_t dumpsys_priority;
    size_t len;                 // length of the name
    uint16_t name[0];           // service name
};

int svcmgr_handler(struct binder_state *bs,
                   struct binder_transaction_data *txn,
                   struct binder_io *msg,
                   struct binder_io *reply)
{
    struct svcinfo *si;
    uint16_t *s;
    size_t len;
    uint32_t handle;
    uint32_t strict_policy;
    int allow_isolated;
    uint32_t dumpsys_priority;

    /* ... */
    // Read the interface token.
    s = bio_get_string16(msg, &len);
    if (s == NULL) {
        return -1;
    }
    /* ... */

    switch(txn->code) {
    case SVC_MGR_GET_SERVICE:
    case SVC_MGR_CHECK_SERVICE:
        // Service name.
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        // Look up the handle by name.
        handle = do_find_service(s, len, txn->sender_euid, txn->sender_pid);
        if (!handle)
            break;
        bio_put_ref(reply, handle);
        return 0;

    case SVC_MGR_ADD_SERVICE:
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        handle = bio_get_ref(msg);
        allow_isolated = bio_get_uint32(msg) ? 1 : 0;
        dumpsys_priority = bio_get_uint32(msg);
        // Register the service.
        if (do_add_service(bs, s, len, handle, txn->sender_euid, allow_isolated,
                           dumpsys_priority, txn->sender_pid))
            return -1;
        break;

    case SVC_MGR_LIST_SERVICES: {
        uint32_t n = bio_get_uint32(msg);
        uint32_t req_dumpsys_priority = bio_get_uint32(msg);

        if (!svc_can_list(txn->sender_pid, txn->sender_euid)) {
            ALOGE("list_service() uid=%d - PERMISSION DENIED\n",
                  txn->sender_euid);
            return -1;
        }
        si = svclist;
        // Walk to the n-th service that matches the requested priority.
        while (si) {
            if (si->dumpsys_priority & req_dumpsys_priority) {
                if (n == 0) break;
                n--;
            }
            si = si->next;
        }
        if (si) {
            bio_put_string16(reply, si->name);
            return 0;
        }
        return -1;
    }
    default:
        ALOGE("unknown code %d\n", txn->code);
        return -1;
    }

    bio_put_uint32(reply, 0);
    return 0;
}
```

### Core work

#### do_add_service

```c
int do_add_service(struct binder_state *bs, const uint16_t *s, size_t len, uint32_t handle,
                   uid_t uid, int allow_isolated, uint32_t dumpsys_priority, pid_t spid)
{
    struct svcinfo *si;

    if (!handle || (len == 0) || (len > 127))
        return -1;

    // Permission check.
    if (!svc_can_register(s, len, spid, uid)) {
        ALOGE("add_service('%s',%x) uid=%d - PERMISSION DENIED\n",
              str8(s, len), handle, uid);
        return -1;
    }

    // Look for an existing entry with the same name.
    si = find_svc(s, len);
    if (si) {
        if (si->handle) {
            ALOGE("add_service('%s',%x) uid=%d - ALREADY REGISTERED, OVERRIDE\n",
                  str8(s, len), handle, uid);
            // Release the previous registration first.
            svcinfo_death(bs, si);
        }
        si->handle = handle;
    } else {
        si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));
        if (!si) {
            ALOGE("add_service('%s',%x) uid=%d - OUT OF MEMORY\n",
                  str8(s, len), handle, uid);
            return -1;
        }
        si->handle = handle;
        si->len = len;
        memcpy(si->name, s, (len + 1) * sizeof(uint16_t));
        si->name[len] = '\0';
        si->death.func = (void*) svcinfo_death;
        si->death.ptr = si;
        si->allow_isolated = allow_isolated;
        si->dumpsys_priority = dumpsys_priority;
        // Push the new entry onto the front of svclist.
        si->next = svclist;
        svclist = si;
    }

    binder_acquire(bs, handle);
    // Ask to be notified when the service's process dies.
    binder_link_to_death(bs, handle, &si->death);
    return 0;
}
```

#### do_find_service

```c
uint32_t do_find_service(const uint16_t *s, size_t len, uid_t uid, pid_t spid)
{
    // Look up the entry by name.
    struct svcinfo *si = find_svc(s, len);

    if (!si || !si->handle) {
        return 0;
    }

    if (!si->allow_isolated) {
        // The service does not allow access from isolated processes,
        // so check whether the caller's uid is an isolated one.
        uid_t appid = uid % AID_USER;
        if (appid >= AID_ISOLATED_START && appid <= AID_ISOLATED_END) {
            return 0;
        }
    }

    // Permission check.
    if (!svc_can_find(s, len, spid, uid)) {
        return 0;
    }

    return si->handle;
}
```
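The isolated-process check above is plain modular arithmetic on the uid. Here is a small sketch of it, using constant values that I believe match AOSP's android_filesystem_config.h (AID_USER = 100000, AID_ISOLATED_START = 99000, AID_ISOLATED_END = 99999). The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Assumed to match AOSP's android_filesystem_config.h.
constexpr uint32_t kAidUser = 100000;          // AID_USER: uid range per user
constexpr uint32_t kAidIsolatedStart = 99000;  // AID_ISOLATED_START
constexpr uint32_t kAidIsolatedEnd = 99999;    // AID_ISOLATED_END

// Hypothetical helper: build a uid from a user id and an app id.
uint32_t make_uid(uint32_t user, uint32_t appid) {
    assert(appid < kAidUser);
    return user * kAidUser + appid;
}

// True if the uid belongs to an isolated process, whatever the user.
bool is_isolated_uid(uint32_t uid) {
    uint32_t appid = uid % kAidUser;  // strip the per-user offset
    return appid >= kAidIsolatedStart && appid <= kAidIsolatedEnd;
}

// Same decision as in do_find_service(): isolated callers are rejected
// unless the service opted in with allow_isolated.
bool may_find(bool allow_isolated, uint32_t uid) {
    return allow_isolated || !is_isolated_uid(uid);
}
```

For example, uid 1099005 (user 10, app id 99005) counts as isolated, while uid 10123 does not.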

#### binder_send_reply

```c
void binder_send_reply(struct binder_state *bs,
                       struct binder_io *reply,
                       binder_uintptr_t buffer_to_free,
                       int status)
{
    struct {
        uint32_t cmd_free;
        binder_uintptr_t buffer;
        uint32_t cmd_reply;
        struct binder_transaction_data txn;
    } __attribute__((packed)) data;

    // First command: free the buffer of the request just handled.
    data.cmd_free = BC_FREE_BUFFER;
    data.buffer = buffer_to_free;
    // Second command: send the reply.
    data.cmd_reply = BC_REPLY;
    data.txn.target.ptr = 0;
    data.txn.cookie = 0;
    data.txn.code = 0;
    if (status) {
        data.txn.flags = TF_STATUS_CODE;
        data.txn.data_size = sizeof(int);
        data.txn.offsets_size = 0;
        data.txn.data.ptr.buffer = (uintptr_t)&status;
        data.txn.data.ptr.offsets = 0;
    } else {
        data.txn.flags = 0;
        data.txn.data_size = reply->data - reply->data0;
        data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);
        data.txn.data.ptr.buffer = (uintptr_t)reply->data0;
        data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;
    }
    // Both commands go to the driver in a single write.
    binder_write(bs, &data, sizeof(data));
}
```

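### Summary

ServiceManager centrally manages all services in the system. It uses permission checks to decide whether a process may register a service, and it looks up a service by its string name. Because ServiceManager registers a death notification for every service registered with it, only ServiceManager has to be told when a service's process dies. Each client can then learn the state of the server process by querying ServiceManager, which avoids the excessive load that would arise if every client monitored the server directly.

ServiceManager startup flow:

Open the Binder driver and call mmap() to set up a 128 KB memory mapping: binder_open();

Tell the Binder driver to make this process the context manager (the Binder daemon): binder_become_context_manager();

Verify SELinux permissions to decide whether a process may register or look up a given service;

Enter the loop and wait for client requests: binder_loop();

Register services: services are keyed by name, and if a service with the same name is already registered, the previous registration is removed before the new one is recorded;

Death notification: when the process hosting a binder dies, binder_release is called, which then calls binder_node_release. This is where the death-notification callback is sent.

ServiceManager's two core functions are looking up and registering services:

Registering a service: record the service name and handle, and store them in the svclist list;

Looking up a service: find the corresponding handle by service name.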
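The two core operations summarized above can be sketched as a tiny name-to-handle registry backed by a singly linked list, in the spirit of svclist. This is an illustrative model only: ServiceEntry and ServiceRegistry are hypothetical names, and permission checks and death notifications are left out.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for struct svcinfo: one node of the list.
struct ServiceEntry {
    std::string name;
    uint32_t handle = 0;
    std::unique_ptr<ServiceEntry> next;
};

class ServiceRegistry {
public:
    // Register name -> handle. Re-registering a name overrides the old handle.
    bool add(const std::string& name, uint32_t handle) {
        // Same basic validation as do_add_service().
        if (handle == 0 || name.empty() || name.size() > 127) return false;
        if (ServiceEntry* existing = find(name)) {
            existing->handle = handle;
            return true;
        }
        auto entry = std::make_unique<ServiceEntry>();
        entry->name = name;
        entry->handle = handle;
        entry->next = std::move(head_);  // push onto the front of the list
        head_ = std::move(entry);
        assert(lookup(name) == handle);  // postcondition of a successful add
        return true;
    }

    // Return the handle registered for name, or 0 if there is none.
    uint32_t lookup(const std::string& name) const {
        for (const ServiceEntry* e = head_.get(); e; e = e->next.get()) {
            if (e->name == name) return e->handle;
        }
        return 0;
    }

private:
    ServiceEntry* find(const std::string& name) {
        for (ServiceEntry* e = head_.get(); e; e = e->next.get()) {
            if (e->name == name) return e;
        }
        return nullptr;
    }

    std::unique_ptr<ServiceEntry> head_;
};
```

Returning 0 for "not found" works because handle 0 is reserved for ServiceManager itself and is never a valid registered service handle.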

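## Obtaining ServiceManager

### Overview

ServiceManager is obtained through defaultServiceManager(). Before a process registers a service (addService) or fetches one (getService), it must first call defaultServiceManager() to get the gDefaultServiceManager object. If the object already exists it is returned directly. Otherwise it is created, which involves calling open() on the Binder device and using mmap() to map memory shared with the kernel.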

### defaultServiceManager

```cpp
sp<IServiceManager> defaultServiceManager()
{
    if (gDefaultServiceManager != NULL) return gDefaultServiceManager;

    {
        AutoMutex _l(gDefaultServiceManagerLock);
        // Retry until ServiceManager is available.
        while (gDefaultServiceManager == NULL) {
            gDefaultServiceManager = interface_cast<IServiceManager>(
                ProcessState::self()->getContextObject(NULL));
            if (gDefaultServiceManager == NULL)
                sleep(1);
        }
    }

    return gDefaultServiceManager;
}
```

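The ServiceManager object is obtained with the singleton pattern: if gDefaultServiceManager exists it is returned directly, otherwise a new object is created. Creation breaks down into three steps:

ProcessState::self(): obtains the ProcessState object (also a singleton). Each process has exactly one ProcessState object; it is returned if it exists and created if it does not.

getContextObject(): obtains the BpBinder object. For the BpBinder with handle=0, it is returned if it exists and created only if it does not.

interface_cast<IServiceManager>(): obtains the BpServiceManager object.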

### Obtaining the ProcessState object

```cpp
sp<ProcessState> ProcessState::self()
{
    Mutex::Autolock _l(gProcessMutex);
    if (gProcess != NULL) {
        return gProcess;
    }
    // Create the ProcessState on first use.
    gProcess = new ProcessState("/dev/binder");
    return gProcess;
}
```

The singleton pattern guarantees that each process has only one ProcessState object. Its constructor and open_driver() are shown below:

```cpp
ProcessState::ProcessState(const char *driver)
    : mDriverName(String8(driver))
    , mDriverFD(open_driver(driver))  // open the Binder driver
    , mVMStart(MAP_FAILED)
    , mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)
    , mThreadCountDecrement(PTHREAD_COND_INITIALIZER)
    , mExecutingThreadsCount(0)
    , mMaxThreads(DEFAULT_MAX_BINDER_THREADS)
    , mStarvationStartTimeMs(0)
    , mManagesContexts(false)
    , mBinderContextCheckFunc(NULL)
    , mBinderContextUserData(NULL)
    , mThreadPoolStarted(false)
    , mThreadPoolSeq(1)
{
    if (mDriverFD >= 0) {
        // Map BINDER_VM_SIZE bytes of the Binder device for receiving transactions.
        mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
        if (mVMStart == MAP_FAILED) {
            close(mDriverFD);
            mDriverFD = -1;
            mDriverName.clear();
        }
    }
}

static int open_driver(const char *driver)
{
    int fd = open(driver, O_RDWR | O_CLOEXEC);
    if (fd >= 0) {
        int vers = 0;
        status_t result = ioctl(fd, BINDER_VERSION, &vers);
        if (result == -1) {
            ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));
            close(fd);
            fd = -1;
        }
        if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {
            ALOGE("Binder driver protocol(%d) does not match user space protocol(%d)! ioctl() return value: %d",
                  vers, BINDER_CURRENT_PROTOCOL_VERSION, result);
            close(fd);
            fd = -1;
        }
        // Tell the driver the maximum number of Binder threads.
        size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;
        result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);
        if (result == -1) {
            ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));
        }
    } else {
        ALOGW("Opening '%s' failed: %s\n", driver, strerror(errno));
    }
    return fd;
}
```

Because the ProcessState singleton is unique, a process opens the Binder device only once. The member variable mDriverFD records the driver's fd and is used to access the Binder device.
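The "create once under a lock, then reuse" behaviour of ProcessState::self() can be modelled in a few lines. The class ProcessStateLike below is a hypothetical stand-in, and its constructor only counts invocations where the real one would open and mmap the driver.

```cpp
#include <cassert>
#include <memory>
#include <mutex>

// Hypothetical stand-in for ProcessState.
class ProcessStateLike {
public:
    // Return the process-wide instance, creating it on first use.
    static std::shared_ptr<ProcessStateLike> self() {
        std::lock_guard<std::mutex> lock(mutex_ref());
        std::shared_ptr<ProcessStateLike>& instance = instance_ref();
        if (!instance) {
            instance.reset(new ProcessStateLike());
        }
        return instance;
    }

    // How many times the constructor ("open the driver") has run.
    static int open_count() { return open_count_ref(); }

private:
    ProcessStateLike() {
        ++open_count_ref();             // stands in for open() + mmap()
        assert(open_count_ref() == 1);  // the singleton is built only once
    }

    // Function-local statics avoid out-of-class definitions.
    static std::mutex& mutex_ref() {
        static std::mutex m;
        return m;
    }
    static std::shared_ptr<ProcessStateLike>& instance_ref() {
        static std::shared_ptr<ProcessStateLike> p;
        return p;
    }
    static int& open_count_ref() {
        static int n = 0;
        return n;
    }
};
```

However many times self() is called, the constructor runs once, which is why the real process opens the Binder device only once.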

### Obtaining the BpBinder object

```cpp
sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)
{
    // Handle 0 refers to ServiceManager.
    return getStrongProxyForHandle(0);
}

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)
{
    sp<IBinder> result;

    AutoMutex _l(mLock);

    // Find (or allocate) the table entry for this handle.
    handle_entry* e = lookupHandleLocked(handle);

    if (e != NULL) {
        IBinder* b = e->binder;
        if (b == NULL || !e->refs->attemptIncWeak(this)) {
            if (handle == 0) {
                // Ping the context manager to make sure it is alive.
                Parcel data;
                status_t status = IPCThreadState::self()->transact(
                        0, IBinder::PING_TRANSACTION, data, NULL, 0);
                if (status == DEAD_OBJECT)
                    return NULL;
            }

            // Create the BpBinder for this handle.
            b = BpBinder::create(handle);
            e->binder = b;
            if (b) e->refs = b->getWeakRefs();
            result = b;
        } else {
            result.force_set(b);
            e->refs->decWeak(this);
        }
    }

    return result;
}

BpBinder* BpBinder::create(int32_t handle) {
    int32_t trackedUid = -1;
    if (sCountByUidEnabled) {
        trackedUid = IPCThreadState::self()->getCallingUid();
        AutoMutex _l(sTrackingLock);
        uint32_t trackedValue = sTrackingMap[trackedUid];
        if (CC_UNLIKELY(trackedValue & LIMIT_REACHED_MASK)) {
            if (sBinderProxyThrottleCreate) {
                return nullptr;
            }
        } else {
            if ((trackedValue & COUNTING_VALUE_MASK) >= sBinderProxyCountHighWatermark) {
                ALOGE("Too many binder proxy objects sent to uid %d from uid %d (%d proxies held)",
                      getuid(), trackedUid, trackedValue);
                sTrackingMap[trackedUid] |= LIMIT_REACHED_MASK;
                if (sLimitCallback) sLimitCallback(trackedUid);
                if (sBinderProxyThrottleCreate) {
                    ALOGI("Throttling binder proxy creates from uid %d in uid %d until binder proxy"
                          " count drops below %d",
                          trackedUid, getuid(), sBinderProxyCountLowWatermark);
                    return nullptr;
                }
            }
        }
        sTrackingMap[trackedUid]++;
    }
    return new BpBinder(handle, trackedUid);
}

BpBinder::BpBinder(int32_t handle, int32_t trackedUid)
    : mHandle(handle)
    , mAlive(1)
    , mObitsSent(0)
    , mObituaries(NULL)
    , mTrackedUid(trackedUid)
{
    extendObjectLifetime(OBJECT_LIFETIME_WEAK);
    // Increment the weak reference count for this handle.
    IPCThreadState::self()->incWeakHandle(handle, this);
}
```

### Obtaining BpServiceManager

```cpp
// IInterface.h
template<typename INTERFACE>
inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)
{
    return INTERFACE::asInterface(obj);
}

// IServiceManager.h
DECLARE_META_INTERFACE(ServiceManager)
// IServiceManager.cpp
IMPLEMENT_META_INTERFACE(ServiceManager, "android.os.IServiceManager");

#define DECLARE_META_INTERFACE(INTERFACE)                               \
    static const ::android::String16 descriptor;                        \
    static ::android::sp<I##INTERFACE> asInterface(                     \
            const ::android::sp<::android::IBinder>& obj);              \
    virtual const ::android::String16& getInterfaceDescriptor() const;  \
    I##INTERFACE();                                                     \
    virtual ~I##INTERFACE();                                            \

#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME)                       \
    const ::android::String16 I##INTERFACE::descriptor(NAME);           \
    const ::android::String16&                                          \
            I##INTERFACE::getInterfaceDescriptor() const {              \
        return I##INTERFACE::descriptor;                                \
    }                                                                   \
    ::android::sp<I##INTERFACE> I##INTERFACE::asInterface(              \
            const ::android::sp<::android::IBinder>& obj)               \
    {                                                                   \
        ::android::sp<I##INTERFACE> intr;                               \
        if (obj != NULL) {                                              \
            intr = static_cast<I##INTERFACE*>(                          \
                obj->queryLocalInterface(                               \
                        I##INTERFACE::descriptor).get());               \
            if (intr == NULL) {                                         \
                intr = new Bp##INTERFACE(obj);                          \
            }                                                           \
        }                                                               \
        return intr;                                                    \
    }                                                                   \
    I##INTERFACE::I##INTERFACE() { }                                    \
    I##INTERFACE::~I##INTERFACE() { }                                   \

```

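As the macros show, IServiceManager::asInterface() is here equivalent to new BpServiceManager(), because a BpBinder has no local interface to return. In this case the call is new BpServiceManager(BpBinder).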
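The decision made inside asInterface() (use the local object if there is one, otherwise wrap the binder in a proxy) can be sketched with ordinary C++ classes. Everything here is a hypothetical model: BinderLike, IGreeter, LocalGreeter, RemoteHandle and GreeterProxy are illustrative names, and std::shared_ptr stands in for Android's sp<>.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct IGreeter;  // the business interface, defined below

// Hypothetical stand-in for IBinder.
struct BinderLike {
    virtual ~BinderLike() = default;
    // A local object returns its own interface; a remote one returns nullptr.
    virtual IGreeter* queryLocalInterface() { return nullptr; }
};

struct IGreeter {
    virtual ~IGreeter() = default;
    virtual std::string greet() = 0;
};

// Local implementation (plays the role of a BBinder-derived service).
struct LocalGreeter : BinderLike, IGreeter {
    IGreeter* queryLocalInterface() override { return this; }
    std::string greet() override { return "local"; }
};

// Remote handle (plays the role of BpBinder).
struct RemoteHandle : BinderLike {};

// Proxy (plays the role of BpServiceManager); a real one would send IPC.
struct GreeterProxy : IGreeter {
    explicit GreeterProxy(std::shared_ptr<BinderLike> remote)
        : remote_(std::move(remote)) {
        assert(remote_ != nullptr);
    }
    std::string greet() override { return "proxy"; }
    std::shared_ptr<BinderLike> remote_;
};

// Same shape as asInterface(): prefer the local object, else build a proxy.
std::shared_ptr<IGreeter> as_interface(const std::shared_ptr<BinderLike>& obj) {
    if (!obj) return nullptr;
    if (IGreeter* local = obj->queryLocalInterface()) {
        // Aliasing constructor: share ownership with obj, point at the interface.
        return std::shared_ptr<IGreeter>(obj, local);
    }
    return std::make_shared<GreeterProxy>(obj);
}
```

Callers see only IGreeter, so the same code works whether the service lives in the caller's process or in another one.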

```cpp
class BpServiceManager : public BpInterface<IServiceManager>
{
    /* ... */
};

template<typename INTERFACE>
inline BpInterface<INTERFACE>::BpInterface(const sp<IBinder>& remote)
    : BpRefBase(remote)
{
}

BpRefBase::BpRefBase(const sp<IBinder>& o)
    : mRemote(o.get()), mRefs(NULL), mState(0)
{
    extendObjectLifetime(OBJECT_LIFETIME_WEAK);

    // mRemote points at the BpBinder.
    if (mRemote) {
        mRemote->incStrong(this);
        mRefs = mRemote->createWeak(this);
    }
}
```

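### Summary

defaultServiceManager() is equivalent to new BpServiceManager(new BpBinder(0)).

ProcessState::self() does the following:

Calls open() to open the /dev/binder device;

Uses mmap() to create a memory mapping of size 1M-8K (BINDER_VM_SIZE);

Sets the maximum number of concurrent Binder threads for the process to DEFAULT_MAX_BINDER_THREADS, which is 15 in the AOSP source (16 threads once the main thread is counted).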
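The "1M-8K" figure comes from BINDER_VM_SIZE, which, as far as I recall from ProcessState.cpp, is defined as 1 MB minus two pages. A quick sketch of the arithmetic follows; the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <unistd.h>  // sysconf, _SC_PAGE_SIZE

// Mirrors the BINDER_VM_SIZE formula: 1 MiB minus two pages.
size_t binder_vm_size(size_t page_size) {
    assert(page_size > 0 && 2 * page_size < 1024 * 1024);
    return 1 * 1024 * 1024 - 2 * page_size;
}

// The same value for the page size of the machine running this code.
size_t binder_vm_size_for_this_machine() {
    return binder_vm_size(static_cast<size_t>(sysconf(_SC_PAGE_SIZE)));
}
```

With the common 4 KB page size this gives 1048576 - 8192 = 1040384 bytes, that is, 1M-8K. On a device with larger pages the mapping is correspondingly smaller.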

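BpServiceManager neatly combines the communication layer and the business layer:

It implements the business-logic functions of the IServiceManager interface by inheriting from it;

It performs the Binder communication through its member variable mRemote = new BpBinder(0);

A BpBinder refers to its BBinder through a handle. Throughout the Binder system, handle=0 denotes the BBinder that corresponds to ServiceManager.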

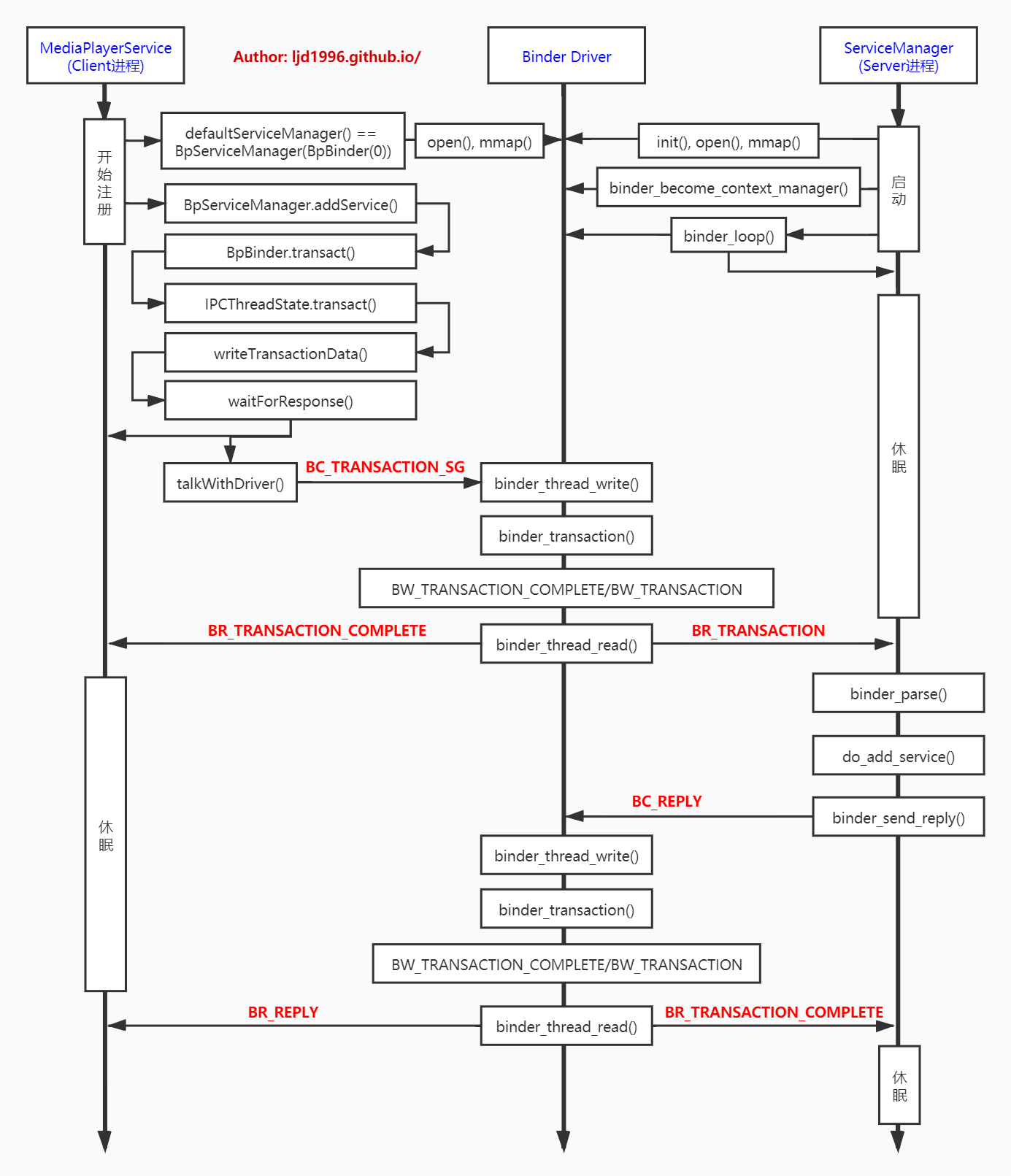

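## Registering a service (addService)

The Media service is used below as an example of how a native-layer service registers with ServiceManager.

### Entry point

The Media entry point is the main() function in main_mediaserver.cpp:

```cpp
int main(int argc __unused, char **argv __unused)
{
    signal(SIGPIPE, SIG_IGN);

    // Obtain the ProcessState instance.
    sp<ProcessState> proc(ProcessState::self());
    // Obtain the BpServiceManager object.
    sp<IServiceManager> sm(defaultServiceManager());
    InitializeIcuOrDie();
    // Register the media player service.
    MediaPlayerService::instantiate();
    ResourceManagerService::instantiate();
    registerExtensions();
    // Start the Binder thread pool.
    ProcessState::self()->startThreadPool();
    // Add the current thread to the thread pool.
    IPCThreadState::self()->joinThreadPool();
}
```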

### Client: service registration

#### BpServiceManager.addService

Registering MediaPlayerService: defaultServiceManager() returns a BpServiceManager and creates the ProcessState and BpBinder objects along the way, so the call below is equivalent to BpServiceManager->addService.

```cpp
void MediaPlayerService::instantiate() {
    defaultServiceManager()->addService(
            String16("media.player"), new MediaPlayerService());
}

class IServiceManager : public IInterface
{
    /* ... */
    virtual sp<IBinder> getService(const String16& name) const = 0;
    virtual sp<IBinder> checkService(const String16& name) const = 0;
    virtual status_t addService(const String16& name, const sp<IBinder>& service,
                                bool allowIsolated = false,
                                int dumpsysFlags = DUMP_FLAG_PRIORITY_DEFAULT) = 0;
    /* ... */
};

// BpServiceManager
virtual status_t addService(const String16& name, const sp<IBinder>& service,
                            bool allowIsolated, int dumpsysPriority) {
    Parcel data, reply;
    data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
    data.writeString16(name);
    // Flatten the service's binder object into the parcel.
    data.writeStrongBinder(service);
    data.writeInt32(allowIsolated ? 1 : 0);
    data.writeInt32(dumpsysPriority);
    // remote() is the BpBinder with handle 0.
    status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);
    return err == NO_ERROR ? reply.readExceptionCode() : err;
}

status_t Parcel::writeStrongBinder(const sp<IBinder>& val)
{
    return flatten_binder(ProcessState::self(), val, this);
}

BBinder* IBinder::localBinder()
{
    return NULL;
}

BBinder* BBinder::localBinder()
{
    return this;
}

BpBinder* BpBinder::remoteBinder()
{
    return this;
}

BpBinder* IBinder::remoteBinder()
{
    return NULL;
}

status_t flatten_binder(const sp<ProcessState>& /*proc*/,
    const sp<IBinder>& binder, Parcel* out)
{
    flat_binder_object obj;
    /* ... */
    if (binder != NULL) {
        IBinder *local = binder->localBinder();
        if (!local) {
            // Remote binder: send its handle.
            BpBinder *proxy = binder->remoteBinder();
            if (proxy == NULL) {
                ALOGE("null proxy");
            }
            const int32_t handle = proxy ? proxy->handle() : 0;
            obj.hdr.type = BINDER_TYPE_HANDLE;
            obj.binder = 0;
            obj.handle = handle;
            obj.cookie = 0;
        } else {
            // Local binder: this is the branch taken when registering a service.
            obj.hdr.type = BINDER_TYPE_BINDER;
            obj.binder = reinterpret_cast<uintptr_t>(local->getWeakRefs());
            obj.cookie = reinterpret_cast<uintptr_t>(local);
        }
    } else {
        /* ... null binder handling omitted in this excerpt ... */
    }

    return finish_flatten_binder(binder, obj, out);
}

inline static status_t finish_flatten_binder(
    const sp<IBinder>& /*binder*/, const flat_binder_object& flat, Parcel* out)
{
    return out->writeObject(flat, false);
}
```

#### BpBinder.transact

```cpp
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    if (mAlive) {
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }

    return DEAD_OBJECT;
}
```

The Binder proxy's transact() hands the real work over to IPCThreadState::transact().

```cpp
IPCThreadState* IPCThreadState::self()
{
    if (gHaveTLS) {
restart:
        const pthread_key_t k = gTLS;
        IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);
        if (st) return st;
        // First call on this thread: create its IPCThreadState.
        return new IPCThreadState;
    }

    if (gShutdown) {
        ALOGW("Calling IPCThreadState::self() during shutdown is dangerous, expect a crash.\n");
        return NULL;
    }

    pthread_mutex_lock(&gTLSMutex);
    if (!gHaveTLS) {
        // Create the TLS key once per process.
        int key_create_value = pthread_key_create(&gTLS, threadDestructor);
        if (key_create_value != 0) {
            pthread_mutex_unlock(&gTLSMutex);
            return NULL;
        }
        gHaveTLS = true;
    }
    pthread_mutex_unlock(&gTLSMutex);
    goto restart;
}
```

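TLS stands for thread-local storage. Every thread has its own TLS, which is private to the thread and not shared between threads. The method above fetches the IPCThreadState object stored in the calling thread's TLS.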
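The lazy per-thread lookup can be sketched with the C++11 thread_local keyword, which here plays the role that pthread_getspecific and pthread_setspecific play in the real code. ThreadStateLike and thread_state_self are hypothetical names, not libbinder API.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for IPCThreadState.
struct ThreadStateLike {
    int transactions = 0;  // some per-thread bookkeeping
};

// Return the calling thread's state object, creating it on first use.
// Each thread that calls this gets its own separate instance.
ThreadStateLike* thread_state_self() {
    thread_local std::unique_ptr<ThreadStateLike> state;
    if (!state) {
        state.reset(new ThreadStateLike());
    }
    assert(state != nullptr);
    return state.get();
}
```

Repeated calls on one thread return the same object, so state such as pending output accumulates per thread without any locking.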

```cpp
IPCThreadState::IPCThreadState()
    : mProcess(ProcessState::self()),
      mMyThreadId(gettid()),
      mStrictModePolicy(0),
      mLastTransactionBinderFlags(0),
      mIsLooper(false),
      mIsPollingThread(false) {
    // Store this object in the thread's TLS slot.
    pthread_setspecific(gTLS, this);
    clearCaller();
    mIn.setDataCapacity(256);
    mOut.setDataCapacity(256);
    (void)mMyThreadId;
}
```

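Every thread has one IPCThreadState, and every IPCThreadState has one mIn and one mOut. The member variable mProcess holds the ProcessState, of which there is only one per process.

mIn receives data coming from the Binder driver; its default capacity is 256 bytes;

mOut stores data to be sent to the Binder driver; its default capacity is 256 bytes.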

#### IPCThreadState.transact

```cpp
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    status_t err;
    /* ... */
    // Queue the transaction in mOut.
    err = writeTransactionData(BC_TRANSACTION_SG, flags, handle, code, data, NULL);

    if (err != NO_ERROR) {
        if (reply) reply->setError(err);
        return (mLastError = err);
    }

    if ((flags & TF_ONE_WAY) == 0) {
        // Synchronous call: wait for the reply.
        if (reply) {
            err = waitForResponse(reply);
        } else {
            Parcel fakeReply;
            err = waitForResponse(&fakeReply);
        }
    } else {
        // One-way call: no reply is expected.
        err = waitForResponse(NULL, NULL);
    }

    return err;
}
```

#### IPCThreadState.writeTransactionData

```cpp
status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
    int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data_sg tr_sg;
    tr_sg.transaction_data.target.ptr = 0;
    tr_sg.transaction_data.target.handle = handle;  // identifies the destination
    tr_sg.transaction_data.code = code;
    tr_sg.transaction_data.flags = binderFlags;
    tr_sg.transaction_data.cookie = 0;
    tr_sg.transaction_data.sender_pid = 0;
    tr_sg.transaction_data.sender_euid = 0;

    const status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        tr_sg.transaction_data.data_size = data.ipcDataSize();
        tr_sg.transaction_data.data.ptr.buffer = data.ipcData();
        tr_sg.transaction_data.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);
        tr_sg.transaction_data.data.ptr.offsets = data.ipcObjects();
        tr_sg.buffers_size = data.ipcBufferSize();
    } else if (statusBuffer) {
        tr_sg.transaction_data.flags |= TF_STATUS_CODE;
        *statusBuffer = err;
        tr_sg.transaction_data.data_size = sizeof(status_t);
        tr_sg.transaction_data.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);
        tr_sg.transaction_data.offsets_size = 0;
        tr_sg.transaction_data.data.ptr.offsets = 0;
        tr_sg.buffers_size = 0;
    } else {
        return (mLastError = err);
    }

    // Write the command followed by the transaction struct into mOut.
    mOut.writeInt32(cmd);
    mOut.write(&tr_sg, sizeof(tr_sg));

    return NO_ERROR;
}
```

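In writeTransactionData(), the value of handle identifies the destination. When registering a service the destination is service manager; handle=0 corresponds to the binder_context_mgr_node object, which is exactly the binder entity that belongs to service manager.

The binder_transaction_data_sg struct is the data structure used to communicate with the Binder driver. The end result of this step is that the request code BC_TRANSACTION_SG and the binder_transaction_data_sg struct are written into mOut.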
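The "command word followed by a raw struct" layout written into mOut can be illustrated with a plain byte buffer. TxnLike is a deliberately tiny, hypothetical stand-in for binder_transaction_data_sg, and the function names are made up for this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, much smaller stand-in for binder_transaction_data_sg.
struct TxnLike {
    uint32_t handle;     // destination; 0 means the context manager
    uint32_t code;       // which operation to run
    uint32_t flags;
    uint32_t data_size;
};

// Append a 32-bit command followed by the raw struct, the way
// writeTransactionData() appends to mOut.
void write_transaction(std::vector<uint8_t>& out, uint32_t cmd, const TxnLike& txn) {
    const size_t old_size = out.size();
    out.resize(old_size + sizeof(cmd) + sizeof(txn));
    std::memcpy(out.data() + old_size, &cmd, sizeof(cmd));
    std::memcpy(out.data() + old_size + sizeof(cmd), &txn, sizeof(txn));
    assert(out.size() == old_size + sizeof(cmd) + sizeof(txn));
}

// Read one record back at offset, as the receiving side would.
// Returns false if the buffer does not hold a complete record there.
bool read_transaction(const std::vector<uint8_t>& in, size_t offset,
                      uint32_t* cmd, TxnLike* txn) {
    if (offset + sizeof(*cmd) + sizeof(*txn) > in.size()) return false;
    std::memcpy(cmd, in.data() + offset, sizeof(*cmd));
    std::memcpy(txn, in.data() + offset + sizeof(*cmd), sizeof(*txn));
    return true;
}
```

Nothing is sent at this point. As in the real code, the buffer is only handed to the driver later, when talkWithDriver() issues the BINDER_WRITE_READ ioctl.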

#### IPCThreadState.waitForResponse

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    uint32_t cmd;
    int32_t err;

    while (1) {
        // Exchange data with the Binder driver.
        if ((err=talkWithDriver()) < NO_ERROR) break;
        err = mIn.errorCheck();
        if (err < NO_ERROR) break;
        if (mIn.dataAvail() == 0) continue;

        cmd = (uint32_t)mIn.readInt32();

        switch (cmd) {
        // Handling of these commands is omitted in this excerpt.
        case BR_TRANSACTION_COMPLETE: /* ... */
        case BR_DEAD_REPLY:           /* ... */
        case BR_FAILED_REPLY:         /* ... */
        case BR_ACQUIRE_RESULT:       /* ... */
        case BR_REPLY:                /* ... */
        default:
            err = executeCommand(cmd);
            if (err != NO_ERROR) goto finish;
            break;
        }
    }

finish:
    /* ... */
    return err;
}

status_t IPCThreadState::talkWithDriver(bool doReceive)
{
    if (mProcess->mDriverFD <= 0) {
        return -EBADF;
    }

    binder_write_read bwr;

    // Only read if the input buffer has been fully consumed.
    const bool needRead = mIn.dataPosition() >= mIn.dataSize();
    const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

    bwr.write_size = outAvail;
    bwr.write_buffer = (uintptr_t)mOut.data();

    if (doReceive && needRead) {
        bwr.read_size = mIn.dataCapacity();
        bwr.read_buffer = (uintptr_t)mIn.data();
    } else {
        bwr.read_size = 0;
        bwr.read_buffer = 0;
    }

    // Nothing to write and nothing to read: return immediately.
    if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

    bwr.write_consumed = 0;
    bwr.read_consumed = 0;
    status_t err;
    do {
        // Write to and read from the driver in one ioctl.
        if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
            err = NO_ERROR;
        else
            err = -errno;
        /* ... */
    } while (err == -EINTR);
    /* ... */
    return err;
}
```

### Binder Driver

From how the Binder driver works, we know that Binder data is handled mainly in binder_thread_write and binder_thread_read. For the request codes BC_TRANSACTION, BC_REPLY, BC_TRANSACTION_SG and BC_REPLY_SG, the driver runs binder_transaction(). As seen earlier, service registration passes a BBinder object, so localBinder() is non-null during writeStrongBinder() and flat_binder_object.hdr.type is BINDER_TYPE_BINDER:

```c
static void binder_transaction(struct binder_proc *proc,
                   struct binder_thread *thread,
                   struct binder_transaction_data *tr, int reply,
                   binder_size_t extra_buffers_size)
{
    /* ... */
        switch (hdr->type) {
        case BINDER_TYPE_BINDER:
        case BINDER_TYPE_WEAK_BINDER: {
            struct flat_binder_object *fp;

            fp = to_flat_binder_object(hdr);
            ret = binder_translate_binder(fp, t, thread);
            /* ... */
            binder_alloc_copy_to_buffer(&target_proc->alloc,
                            t->buffer, object_offset,
                            fp, sizeof(*fp));
        } break;
        /* ... */
        }
    /* ... */
}

static int binder_translate_binder(struct flat_binder_object *fp,
                   struct binder_transaction *t,
                   struct binder_thread *thread)
{
    struct binder_node *node;
    struct binder_proc *proc = thread->proc;
    struct binder_proc *target_proc = t->to_proc;
    struct binder_ref_data rdata;
    int ret = 0;

    // Find the binder_node in the service's process, creating it if needed.
    node = binder_get_node(proc, fp->binder);
    if (!node) {
        node = binder_new_node(proc, fp);
        if (!node)
            return -ENOMEM;
    }
    /* ... */

    // Create (or reuse) a binder_ref to the node in the target process.
    ret = binder_inc_ref_for_node(target_proc, node,
            fp->hdr.type == BINDER_TYPE_BINDER,
            &thread->todo, &rdata);
    if (ret)
        goto done;

    // Rewrite the object from a binder entity into a handle.
    if (fp->hdr.type == BINDER_TYPE_BINDER)
        fp->hdr.type = BINDER_TYPE_HANDLE;
    else
        fp->hdr.type = BINDER_TYPE_WEAK_HANDLE;
    fp->binder = 0;
    fp->handle = rdata.desc;
    fp->cookie = 0;
    /* ... */
done:
    binder_put_node(node);
    return ret;
}
```

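Service registration creates a binder_node in the process hosting the service and a binder_ref in the servicemanager process. For a given binder_node, each process creates only one binder_ref object.

Handle values follow these rules:

The handle values of the binder_refs recorded in each process's binder_proc start at 1 and increase;

In every process's binder_proc, the binder_ref with handle=0 points to service manager;

The binder_refs that different processes hold for the same service's binder_node can have different handle values.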
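The handle rules above can be modelled with one small table per process. This is a simplified sketch, not the driver's algorithm: RefTable, NodeId and kContextManagerNode are hypothetical names, and handles are simply handed out in increasing order without reuse.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical identifier for a binder_node.
using NodeId = uint64_t;

// Hypothetical id of the node that belongs to service manager.
constexpr NodeId kContextManagerNode = 0;

// Hypothetical per-process table of references (one per binder_proc).
class RefTable {
public:
    // Return this process's handle for node, creating a reference if needed.
    uint32_t handle_for(NodeId node) {
        if (node == kContextManagerNode) return 0;  // handle 0 is reserved
        auto it = handles_.find(node);
        if (it != handles_.end()) return it->second;  // one ref per node per process
        uint32_t handle = next_handle_++;
        assert(handle != 0);  // never hand out the reserved handle
        handles_[node] = handle;
        return handle;
    }

private:
    std::map<NodeId, uint32_t> handles_;
    uint32_t next_handle_ = 1;  // ordinary handles start at 1
};
```

Because every process has its own table, the same node can end up with handle 2 in servicemanager and handle 1 in a client, which is why a handle is only meaningful inside the process that holds it.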

### Server: the ServiceManager process

The binder_loop() in the ServiceManager process keeps reading data from the Binder driver, parses it in binder_parse(), and then calls do_add_service to register the service. The reply then travels back to the client, where waitForResponse() processes it:

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    uint32_t cmd;
    int32_t err;

    while (1) {
        if ((err=talkWithDriver()) < NO_ERROR) break;
        err = mIn.errorCheck();
        if (err < NO_ERROR) break;
        if (mIn.dataAvail() == 0) continue;

        cmd = (uint32_t)mIn.readInt32();

        switch (cmd) {
        case BR_TRANSACTION_COMPLETE:
            // A one-way call is finished once the driver has accepted it.
            if (!reply && !acquireResult) goto finish;
            break;

        case BR_DEAD_REPLY:
        case BR_FAILED_REPLY:
        case BR_ACQUIRE_RESULT:
            /* ... omitted in this excerpt ... */
            goto finish;

        case BR_REPLY:
            {
                binder_transaction_data tr;
                err = mIn.read(&tr, sizeof(tr));
                ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");
                if (err != NO_ERROR) goto finish;

                if (reply) {
                    if ((tr.flags & TF_STATUS_CODE) == 0) {
                        // Hand the reply data over to the caller's Parcel.
                        reply->ipcSetDataReference(
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t),
                            freeBuffer, this);
                    } else {
                        err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);
                        freeBuffer(NULL,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t), this);
                    }
                } else {
                    freeBuffer(NULL,
                        reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                        tr.data_size,
                        reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                        tr.offsets_size/sizeof(binder_size_t), this);
                    continue;
                }
            }
            goto finish;

        default:
            err = executeCommand(cmd);
            if (err != NO_ERROR) goto finish;
            break;
        }
    }

finish:
    /* ... */
    return err;
}
```

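### Summary

Registering the Media service involves MediaPlayerService (acting as the client process) and Service Manager (acting as the server process).

The core of service registration (addService) is creating a binder_node in the process hosting the service and a binder_ref in the servicemanager process.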

## Getting a service (getService)

The example here is fetching the native-layer Media service.

### Client: the requesting process

```cpp
const sp<IMediaPlayerService> IMediaDeathNotifier::getMediaPlayerService()
{
    Mutex::Autolock _l(sServiceLock);
    if (sMediaPlayerService == 0) {
        sp<IServiceManager> sm = defaultServiceManager();
        sp<IBinder> binder;
        do {
            // Look up the service named "media.player".
            binder = sm->getService(String16("media.player"));
            if (binder != 0) {
                break;
            }
            usleep(500000); // 0.5 s
        } while (true);

        if (sDeathNotifier == NULL) {
            sDeathNotifier = new DeathNotifier();
        }
        binder->linkToDeath(sDeathNotifier);
        sMediaPlayerService = interface_cast<IMediaPlayerService>(binder);
    }
    return sMediaPlayerService;
}
```

### BpServiceManager.getService

```cpp
virtual sp<IBinder> getService(const String16& name) const
{
    // Fast path: the service is already registered.
    sp<IBinder> svc = checkService(name);
    if (svc != NULL) return svc;

    const bool isVendorService =
        strcmp(ProcessState::self()->getDriverName().c_str(), "/dev/vndbinder") == 0;
    const long timeout = uptimeMillis() + 5000;
    if (!gSystemBootCompleted) {
        char bootCompleted[PROPERTY_VALUE_MAX];
        property_get("sys.boot_completed", bootCompleted, "0");
        gSystemBootCompleted = strcmp(bootCompleted, "1") == 0 ? true : false;
    }
    // Retry interval in milliseconds.
    const long sleepTime = gSystemBootCompleted ? 1000 : 100;

    int n = 0;
    while (uptimeMillis() < timeout) {
        n++;
        if (isVendorService) {
            ALOGI("Waiting for vendor service %s...", String8(name).string());
            CallStack stack(LOG_TAG);
        } else if (n%10 == 0) {
            ALOGI("Waiting for service %s...", String8(name).string());
        }
        usleep(1000*sleepTime);

        sp<IBinder> svc = checkService(name);
        if (svc != NULL) return svc;
    }
    ALOGW("Service %s didn't start. Returning NULL", String8(name).string());
    return NULL;
}

virtual sp<IBinder> checkService(const String16& name) const
{
    Parcel data, reply;
    data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
    data.writeString16(name);
    remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);
    return reply.readStrongBinder();
}
```
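The retry loop in getService() above is a poll-until-deadline pattern: try once, then sleep and retry until a timeout expires. A minimal sketch with the lookup injected as a callback follows. The function wait_for_service and its parameters are hypothetical, and an int handle with 0 meaning "not found" stands in for sp<IBinder>.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>  // std::this_thread::sleep_for

// Poll check() until it returns a non-zero handle or the timeout expires.
// Returns 0 if the service never showed up.
int wait_for_service(const std::function<int()>& check,
                     std::chrono::milliseconds timeout,
                     std::chrono::milliseconds interval) {
    assert(static_cast<bool>(check));
    using Clock = std::chrono::steady_clock;
    const Clock::time_point deadline = Clock::now() + timeout;

    // Fast path, like the first checkService() call.
    if (int handle = check()) return handle;

    while (Clock::now() < deadline) {
        std::this_thread::sleep_for(interval);
        if (int handle = check()) return handle;
    }
    return 0;
}
```

A steady clock is used for the deadline so that wall-clock adjustments cannot shorten or extend the wait, which is also the reason the original code relies on uptimeMillis().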

Next, the transact method of BpBinder is called. The logic is similar to that of registering a service, and the call eventually enters the Binder driver.

Binder Driver

```c
static void binder_transaction(struct binder_proc *proc,
                               struct binder_thread *thread,
                               struct binder_transaction_data *tr, int reply,
                               binder_size_t extra_buffers_size)
{
    /* ... */
    for (buffer_offset = off_start_offset; buffer_offset < off_end_offset;
         buffer_offset += sizeof(binder_size_t)) {
        /* ... */
        switch (hdr->type) {
        /* ... */
        case BINDER_TYPE_HANDLE:
        case BINDER_TYPE_WEAK_HANDLE: {
            struct flat_binder_object *fp;

            fp = to_flat_binder_object(hdr);
            ret = binder_translate_handle(fp, t, thread);
            /* ... */
            binder_alloc_copy_to_buffer(&target_proc->alloc, t->buffer,
                                        object_offset, fp, sizeof(*fp));
        } break;
        /* ... */
        }
    }
    /* ... */
}

static int binder_translate_handle(struct flat_binder_object *fp,
                                   struct binder_transaction *t,
                                   struct binder_thread *thread)
{
    struct binder_proc *proc = thread->proc;
    struct binder_proc *target_proc = t->to_proc;
    struct binder_node *node;
    struct binder_ref_data src_rdata;
    int ret = 0;

    node = binder_get_node_from_ref(proc, fp->handle,
                                    fp->hdr.type == BINDER_TYPE_HANDLE,
                                    &src_rdata);
    if (!node) {
        binder_user_error("%d:%d got transaction with invalid handle, %d\n",
                          proc->pid, thread->pid, fp->handle);
        return -EINVAL;
    }
    if (security_binder_transfer_binder(proc->tsk, target_proc->tsk)) {
        ret = -EPERM;
        goto done;
    }

    binder_node_lock(node);
    if (node->proc == target_proc) {
        /* Target process hosts the service: turn the handle into a local binder. */
        if (fp->hdr.type == BINDER_TYPE_HANDLE)
            fp->hdr.type = BINDER_TYPE_BINDER;
        else
            fp->hdr.type = BINDER_TYPE_WEAK_BINDER;
        fp->binder = node->ptr;
        fp->cookie = node->cookie;
        if (node->proc)
            binder_inner_proc_lock(node->proc);
        binder_inc_node_nilocked(node,
                                 fp->hdr.type == BINDER_TYPE_BINDER,
                                 0, NULL);
        if (node->proc)
            binder_inner_proc_unlock(node->proc);
        trace_binder_transaction_ref_to_node(t, node, &src_rdata);
        binder_debug(BINDER_DEBUG_TRANSACTION,
                     " ref %d desc %d -> node %d u%016llx\n",
                     src_rdata.debug_id, src_rdata.desc, node->debug_id,
                     (u64)node->ptr);
        binder_node_unlock(node);
    } else {
        /* Target is another process: give it its own binder_ref (handle). */
        struct binder_ref_data dest_rdata;

        binder_node_unlock(node);
        ret = binder_inc_ref_for_node(target_proc, node,
                                      fp->hdr.type == BINDER_TYPE_HANDLE,
                                      NULL, &dest_rdata);
        if (ret)
            goto done;

        fp->binder = 0;
        fp->handle = dest_rdata.desc;
        fp->cookie = 0;
        trace_binder_transaction_ref_to_ref(t, node, &src_rdata, &dest_rdata);
    }
done:
    binder_put_node(node);
    return ret;
}
```

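There are two cases here:

If the requesting process and the service live in different processes, a binder_ref object is created for the requesting process, pointing to the binder_node in the service process;

If the requesting process and the service live in the same process, no new object is created. Only the reference count of the node is incremented, and the type is changed to BINDER_TYPE_BINDER or BINDER_TYPE_WEAK_BINDER.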
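The two cases can be illustrated with a small user-space model. This is only a sketch under simplifying assumptions, not the kernel code: Node, Proc, FlatObject, and translateHandle are hypothetical names, locking is omitted, and reference counting is reduced to a single counter.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

struct Proc;

// Hypothetical stand-in for binder_node.
struct Node {
    Proc* owner;        // process that hosts the service
    std::uint64_t ptr;  // address of the local object inside the owner
    int strongRefs;     // simplified reference count
};

enum class ObjType { Handle, Binder };

// Hypothetical stand-in for flat_binder_object.
struct FlatObject {
    ObjType type;
    std::uint32_t handle;  // meaningful when type == Handle
    std::uint64_t binder;  // meaningful when type == Binder
};

struct Proc {
    std::map<std::uint32_t, Node*> refs;  // handle -> node, like binder_ref
    std::uint32_t nextHandle = 1;         // 0 is reserved for ServiceManager

    // Return the handle this process already has for node, or create one.
    std::uint32_t refFor(Node* node) {
        for (const auto& entry : refs)
            if (entry.second == node) return entry.first;
        refs[nextHandle] = node;
        return nextHandle++;
    }
};

// Rewrite obj, a handle valid in `from`, so that it is meaningful in `to`.
// Returns false when the handle is unknown in the sending process.
bool translateHandle(FlatObject& obj, Proc& from, Proc& to) {
    auto it = from.refs.find(obj.handle);
    if (it == from.refs.end()) return false;
    Node* node = it->second;
    assert(node != nullptr);
    if (node->owner == &to) {
        // Same process as the service: hand back the local object itself.
        obj.type = ObjType::Binder;
        obj.binder = node->ptr;
        obj.handle = 0;
        node->strongRefs++;
    } else {
        // Different process: the receiver gets its own reference.
        obj.handle = to.refFor(node);
        obj.binder = 0;
    }
    return true;
}
```

In this model the handle number is local to each process, which is why the value in the reply has to be rewritten while it crosses the driver.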

Server: the ServiceManager process

As with service registration, the ServiceManager process loops calling binder_parse to parse the data delivered by the Binder driver, and then calls svcmgr_handler:

```c
int svcmgr_handler(struct binder_state *bs,
                   struct binder_transaction_data *txn,
                   struct binder_io *msg,
                   struct binder_io *reply)
{
    struct svcinfo *si;
    uint16_t *s;
    size_t len;
    uint32_t handle;
    uint32_t strict_policy;
    int allow_isolated;
    uint32_t dumpsys_priority;

    if (txn->target.ptr != BINDER_SERVICE_MANAGER)
        return -1;

    if (txn->code == PING_TRANSACTION)
        return 0;

    strict_policy = bio_get_uint32(msg);
    s = bio_get_string16(msg, &len);
    if (s == NULL) {
        return -1;
    }

    if ((len != (sizeof(svcmgr_id) / 2)) ||
        memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {
        fprintf(stderr, "invalid id %s\n", str8(s, len));
        return -1;
    }

    switch (txn->code) {
    case SVC_MGR_GET_SERVICE:
    case SVC_MGR_CHECK_SERVICE:
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        handle = do_find_service(s, len, txn->sender_euid, txn->sender_pid);
        if (!handle)
            break;
        bio_put_ref(reply, handle);
        return 0;
    /* ... */
    }

    bio_put_uint32(reply, 0);
    return 0;
}
```
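The lookup contract of the CHECK_SERVICE branch (a non-zero handle on success, a plain 0 in the reply otherwise) can be sketched as follows. ServiceRegistry, Reply, and handleCheckService are hypothetical names introduced for illustration; the real do_find_service also performs permission checks, which this sketch leaves out.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <unordered_map>

// Hypothetical in-memory registry standing in for ServiceManager's service list.
class ServiceRegistry {
public:
    // Register or replace a service. Handle 0 is reserved, so it is rejected.
    bool add(const std::string& name, std::uint32_t handle) {
        if (handle == 0 || name.empty()) return false;
        services_[name] = handle;
        return true;
    }

    // Same contract as the lookup in the handler: 0 means "not found".
    std::uint32_t find(const std::string& name) const {
        auto it = services_.find(name);
        if (it == services_.end()) return 0;
        assert(it->second != 0);  // add() never stores the reserved handle
        return it->second;
    }

private:
    std::unordered_map<std::string, std::uint32_t> services_;
};

// What ends up in the reply in this sketch: a reference to the handle on
// success, a plain 0 otherwise.
struct Reply {
    bool isRef;
    std::uint32_t value;
};

Reply handleCheckService(const ServiceRegistry& registry, const std::string& name) {
    std::uint32_t handle = registry.find(name);
    if (handle == 0) return Reply{false, 0};
    return Reply{true, handle};
}
```

Returning 0 rather than an error lets the client-side retry loop in getService treat "not registered yet" as an ordinary result.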

Client: readStrongBinder

```cpp
status_t Parcel::readStrongBinder(sp<IBinder>* val) const
{
    status_t status = readNullableStrongBinder(val);
    if (status == OK && !val->get()) {
        status = UNEXPECTED_NULL;
    }
    return status;
}

status_t Parcel::readNullableStrongBinder(sp<IBinder>* val) const
{
    return unflatten_binder(ProcessState::self(), *this, val);
}

status_t unflatten_binder(const sp<ProcessState>& proc,
    const Parcel& in, sp<IBinder>* out)
{
    const flat_binder_object* flat = in.readObject(false);

    if (flat) {
        switch (flat->hdr.type) {
            case BINDER_TYPE_BINDER:
                *out = reinterpret_cast<IBinder*>(flat->cookie);
                return finish_unflatten_binder(NULL, *flat, in);
            case BINDER_TYPE_HANDLE:
                *out = proc->getStrongProxyForHandle(flat->handle);
                return finish_unflatten_binder(
                    static_cast<BpBinder*>(out->get()), *flat, in);
        }
    }
    return BAD_TYPE;
}
```

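Summary

Requesting a service (getService) means asking the servicemanager process for the named service. When binder_transaction() runs, it distinguishes between two cases depending on which process hosts the service:

If the requesting process and the service live in different processes, a binder_ref object is created for the requesting process, pointing to the binder_node in the service process, and readStrongBinder() ultimately returns a BpBinder object;

If the requesting process and the service live in the same process, no new object is created. Only the reference count is incremented and the type is changed to BINDER_TYPE_BINDER or BINDER_TYPE_WEAK_BINDER, and readStrongBinder() ultimately returns the concrete BBinder subclass.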

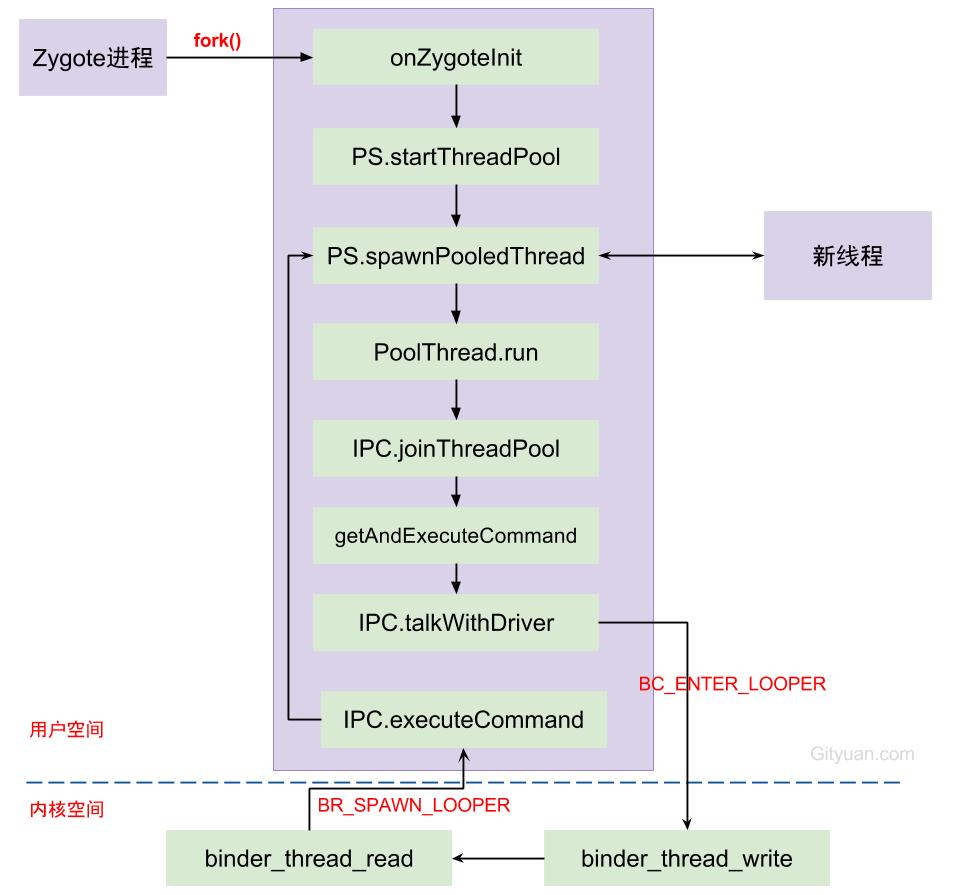

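Binder thread pool

Entry point

Both the system_server process and app processes start the Binder thread pool by calling ZygoteInit.zygoteInit() in the new process once the fork has completed.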

```java
public static final Runnable zygoteInit(int targetSdkVersion, String[] argv,
        ClassLoader classLoader) {
    // ...
    RuntimeInit.redirectLogStreams();
    RuntimeInit.commonInit();
    ZygoteInit.nativeZygoteInit();
    return RuntimeInit.applicationInit(targetSdkVersion, argv, classLoader);
}
```

```cpp
int register_com_android_internal_os_ZygoteInit_nativeZygoteInit(JNIEnv* env)
{
    const JNINativeMethod methods[] = {
        { "nativeZygoteInit", "()V",
            (void*) com_android_internal_os_ZygoteInit_nativeZygoteInit },
    };
    return jniRegisterNativeMethods(env, "com/android/internal/os/ZygoteInit",
        methods, NELEM(methods));
}

static void com_android_internal_os_ZygoteInit_nativeZygoteInit(JNIEnv* env, jobject clazz)
{
    gCurRuntime->onZygoteInit();
}

virtual void onZygoteInit()
{
    sp<ProcessState> proc = ProcessState::self();
    proc->startThreadPool();
}
```

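ProcessState::self() is a singleton. Its main job is to call open() on the /dev/binder device, map the driver's buffer into the process with mmap(), and store the driver's fd in the ProcessState member mDriverFD for later interaction. startThreadPool() creates a new Binder thread that keeps calling talkWithDriver().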

ProcessState.startThreadPool

```cpp
void ProcessState::startThreadPool()
{
    AutoMutex _l(mLock);
    if (!mThreadPoolStarted) {
        mThreadPoolStarted = true;
        spawnPooledThread(true);
    }
}

void ProcessState::spawnPooledThread(bool isMain)
{
    if (mThreadPoolStarted) {
        String8 name = makeBinderThreadName();
        sp<Thread> t = new PoolThread(isMain);
        t->run(name.string());
    }
}

String8 ProcessState::makeBinderThreadName() {
    int32_t s = android_atomic_add(1, &mThreadPoolSeq);
    pid_t pid = getpid();
    String8 name;
    name.appendFormat("Binder:%d_%X", pid, s);
    return name;
}
```

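Once the Binder thread pool is started, mThreadPoolStarted is set to true. This flag guarantees that each application process starts only one Binder thread pool. The thread created here is the Binder main thread (isMain=true); all other threads in the pool are created under the control of the Binder driver.

makeBinderThreadName produces the Binder thread name in the format Binder:pid_x, where x is a sequence number that starts at 1 in each process and increases by one per thread (the %X format prints it in uppercase hexadecimal). The pid field makes it quick to find the process that owns the thread. Only threads created through spawnPooledThread follow this format; a thread that adds itself to the pool by calling joinThreadPool directly does not follow this naming rule.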
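The naming scheme can be reproduced with standard C++ alone. The sketch below is an illustration rather than the libbinder implementation: BinderThreadNamer is a hypothetical class, and std::atomic stands in for android_atomic_add.

```cpp
#include <atomic>
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical stand-in for the name generator in ProcessState.
class BinderThreadNamer {
public:
    explicit BinderThreadNamer(int pid) : pid_(pid) { assert(pid > 0); }

    // fetch_add returns the previous value, so the first name uses 1.
    std::string next() {
        unsigned seq = seq_.fetch_add(1);
        char buf[32];
        std::snprintf(buf, sizeof(buf), "Binder:%d_%X", pid_, seq);
        return std::string(buf);
    }

private:
    int pid_;
    std::atomic<unsigned> seq_{1};
};
```

For pid 1234 the first call yields Binder:1234_1 and the tenth yields Binder:1234_A, since the sequence number is formatted as hexadecimal.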

```cpp
class PoolThread : public Thread
{
public:
    explicit PoolThread(bool isMain)
        : mIsMain(isMain)
    {
    }

protected:
    virtual bool threadLoop()
    {
        IPCThreadState::self()->joinThreadPool(mIsMain);
        return false;
    }

    const bool mIsMain;
};
```

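Judging by its name, new PoolThread(isMain) looks as if it creates a thread pool, but it only creates a single thread; PoolThread extends the Thread class. The t->run() call eventually invokes PoolThread's threadLoop() method.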

IPCThreadState.joinThreadPool

```cpp
void IPCThreadState::joinThreadPool(bool isMain)
{
    mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER);

    status_t result;
    mIsLooper = true;
    do {
        processPendingDerefs();
        result = getAndExecuteCommand();

        if (result < NO_ERROR && result != TIMED_OUT
                && result != -ECONNREFUSED && result != -EBADF) {
            abort();
        }

        if (result == TIMED_OUT && !isMain) {
            break;
        }
    } while (result != -ECONNREFUSED && result != -EBADF);

    mOut.writeInt32(BC_EXIT_LOOPER);
    mIsLooper = false;
    talkWithDriver(false);
}
```
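The exit rules of this loop are easier to see when written as a pure decision function. The following is a sketch only: LoopAction, nextAction, and iterationsUntilExit are hypothetical names, and kTimedOut is assumed to be the negated ETIMEDOUT value.

```cpp
#include <cassert>
#include <cerrno>
#include <vector>

// Status codes assumed for this sketch; libbinder defines its own constants.
constexpr int kNoError = 0;
constexpr int kTimedOut = -ETIMEDOUT;

enum class LoopAction { Continue, Exit, Abort };

// Decide what the pool thread does after one command has been executed.
LoopAction nextAction(int result, bool isMain) {
    const bool driverGone = (result == -ECONNREFUSED || result == -EBADF);
    if (result < kNoError && result != kTimedOut && !driverGone)
        return LoopAction::Abort;  // unexpected error
    if (result == kTimedOut && !isMain)
        return LoopAction::Exit;   // an idle non-main thread may leave
    if (driverGone)
        return LoopAction::Exit;   // the loop condition no longer holds
    return LoopAction::Continue;
}

// Run a scripted sequence of results through nextAction and count how many
// iterations execute before the thread leaves the loop.
int iterationsUntilExit(const std::vector<int>& results, bool isMain) {
    int count = 0;
    for (int r : results) {
        ++count;
        LoopAction action = nextAction(r, isMain);
        assert(action != LoopAction::Abort);  // the script must not contain fatal errors
        if (action == LoopAction::Exit) break;
    }
    return count;
}
```

With the same sequence of results, a main thread keeps looping through a timeout while a non-main thread exits on it, which matches the statement that the main thread does not exit.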

IPCThreadState.getAndExecuteCommand

```cpp
status_t IPCThreadState::getAndExecuteCommand()
{
    status_t result;
    int32_t cmd;

    // Exchange data with the Binder driver, then dispatch the command read back.
    result = talkWithDriver();
    if (result >= NO_ERROR) {
        // ...
        cmd = mIn.readInt32();
        result = executeCommand(cmd);
    }
    return result;
}
```

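getAndExecuteCommand first calls talkWithDriver to interact with the Binder driver. At this point the command that was written to mOut is BC_ENTER_LOOPER. As the analysis above shows, the call ends up in the Binder driver.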

Binder Driver

```c
static int binder_thread_write(struct binder_proc *proc,
                               struct binder_thread *thread,
                               binder_uintptr_t binder_buffer, size_t size,
                               binder_size_t *consumed)
{
    /* ... */
    while (ptr < end && thread->return_error.cmd == BR_OK) {
        /* ... */
        switch (cmd) {
        /* ... */
        case BC_REGISTER_LOOPER:
            binder_inner_proc_lock(proc);
            if (thread->looper & BINDER_LOOPER_STATE_ENTERED) {
                thread->looper |= BINDER_LOOPER_STATE_INVALID;
            } else if (proc->requested_threads == 0) {
                thread->looper |= BINDER_LOOPER_STATE_INVALID;
            } else {
                proc->requested_threads--;
                proc->requested_threads_started++;
            }
            thread->looper |= BINDER_LOOPER_STATE_REGISTERED;
            binder_inner_proc_unlock(proc);
            break;
        case BC_ENTER_LOOPER:
            if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {
                thread->looper |= BINDER_LOOPER_STATE_INVALID;
            }
            thread->looper |= BINDER_LOOPER_STATE_ENTERED;
            break;
        case BC_EXIT_LOOPER:
            thread->looper |= BINDER_LOOPER_STATE_EXITED;
            break;
        /* ... */
        }
    }
    /* ... */
}
```

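Once the thread's looper state has been set to BINDER_LOOPER_STATE_ENTERED, whenever the thread has a transaction to handle the Binder driver goes through binder_thread_read(), and when certain conditions are met it requests the creation of a new Binder thread.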
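The looper bookkeeping performed for BC_ENTER_LOOPER and BC_REGISTER_LOOPER can be modelled outside the kernel. This is a simplified sketch: the flag values and the names ProcCounters, ThreadState, onEnterLooper, and onRegisterLooper are chosen for illustration, and locking is omitted.

```cpp
#include <cassert>
#include <cstdint>

// Bit flags modelled on the driver's looper states; the numeric values are
// picked for this sketch.
enum : std::uint32_t {
    kRegistered = 0x01,
    kEntered    = 0x02,
    kExited     = 0x04,
    kInvalid    = 0x08,
};

struct ProcCounters {
    int requestedThreads = 0;         // spawn requests sent but not yet answered
    int requestedThreadsStarted = 0;  // threads that registered on request
};

struct ThreadState {
    std::uint32_t looper = 0;
};

// Main-thread path: entering after having registered is an invalid sequence.
void onEnterLooper(ThreadState& t) {
    if (t.looper & kRegistered) t.looper |= kInvalid;
    t.looper |= kEntered;
}

// Pool-thread path: only valid as the answer to a pending spawn request.
void onRegisterLooper(ProcCounters& p, ThreadState& t) {
    assert(p.requestedThreads >= 0);
    if (t.looper & kEntered) {
        t.looper |= kInvalid;  // already a main looper
    } else if (p.requestedThreads == 0) {
        t.looper |= kInvalid;  // the driver never asked for this thread
    } else {
        p.requestedThreads--;
        p.requestedThreadsStarted++;
    }
    t.looper |= kRegistered;
}
```

The counters show why only threads registered on request count towards the limit: the enter path never touches requestedThreadsStarted.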

```c
static int binder_thread_read(struct binder_proc *proc,
                              struct binder_thread *thread,
                              binder_uintptr_t binder_buffer, size_t size,
                              binder_size_t *consumed, int non_block)
{
    /* ... */
    while (1) {
        /* ... */
        switch (w->type) {
        case BINDER_WORK_TRANSACTION: {
            binder_inner_proc_unlock(proc);
            t = container_of(w, struct binder_transaction, work);
        } break;
        /* ... */
        case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: {
            /* ... */
            if (cmd == BR_DEAD_BINDER)
                goto done;
        } break;
        }

        if (!t)
            continue;
        /* ... */
        break;
    }

done:
    *consumed = ptr - buffer;
    binder_inner_proc_lock(proc);
    if (proc->requested_threads == 0 &&
        list_empty(&thread->proc->waiting_threads) &&
        proc->requested_threads_started < proc->max_threads &&
        (thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
         BINDER_LOOPER_STATE_ENTERED))) {
        proc->requested_threads++;
        binder_inner_proc_unlock(proc);
        if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))
            return -EFAULT;
        binder_stat_br(proc, thread, BR_SPAWN_LOOPER);
    } else
        binder_inner_proc_unlock(proc);
    return 0;
}
```

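Execution reaches done in any of the following cases:

The current thread hits an error or a similar condition;

The Binder driver delivers a death notification to the client;

The work type is BINDER_WORK_TRANSACTION (that is, the command received was BC_TRANSACTION or BC_REPLY).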

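Any Binder thread generates the BR_SPAWN_LOOPER command, which asks user space to create a new thread, when all of the following conditions hold:

The process has no pending request to create a Binder thread, i.e. requested_threads = 0;

The process has no idle Binder thread available, i.e. waiting_threads is empty;

The number of threads already started in the process is below the limit (15 by default);

The current thread has received BC_ENTER_LOOPER or BC_REGISTER_LOOPER, i.e. it is in the BINDER_LOOPER_STATE_REGISTERED or BINDER_LOOPER_STATE_ENTERED state.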
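The four conditions combine into a single predicate. The sketch below restates them over a plain snapshot struct; PoolSnapshot and shouldSpawnLooper are hypothetical names and the fields only mirror the driver's counters.

```cpp
#include <cassert>

// Hypothetical snapshot of the per-process counters the driver inspects.
struct PoolSnapshot {
    int requestedThreads;         // spawn requests still pending
    int waitingThreads;           // idle threads blocked in the driver
    int requestedThreadsStarted;  // threads started on the driver's request
    int maxThreads;               // limit set by the process (15 by default)
    bool callerIsLooper;          // caller has entered or registered as a looper
};

// True when the driver would ask user space for one more pool thread.
bool shouldSpawnLooper(const PoolSnapshot& s) {
    assert(s.maxThreads >= 0);
    return s.requestedThreads == 0 &&
           s.waitingThreads == 0 &&
           s.requestedThreadsStarted < s.maxThreads &&
           s.callerIsLooper;
}
```

Because requestedThreads is incremented as soon as the command is issued, at most one spawn request is outstanding per process at any time.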

After talkWithDriver receives the transaction, IPCThreadState.executeCommand() is entered to execute the command.

IPCThreadState.executeCommand

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
{
    BBinder* obj;
    RefBase::weakref_type* refs;
    status_t result = NO_ERROR;

    switch ((uint32_t)cmd) {
    case BR_ERROR:
        result = mIn.readInt32();
        break;
    case BR_OK:
        break;
    // ...
    case BR_SPAWN_LOOPER:
        mProcess->spawnPooledThread(false);
        break;
    default:
        result = UNKNOWN_ERROR;
        break;
    }
    // ...
    return result;
}
```

The Binder main thread is created together with its process; ordinary Binder threads created afterwards come from spawnPooledThread(false).

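Summary

By default, the Binder thread pool of each process holds at most 15 threads. This limit does not count the Binder main thread created through the BC_ENTER_LOOPER command; only threads created through BC_REGISTER_LOOPER are counted. For example, consider the following code: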

```cpp
ProcessState::self()->setThreadPoolMaxThreadCount(6);
ProcessState::self()->startThreadPool();
IPCThreadState::self()->joinThreadPool();
```

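Here the limit for pool threads is 6. The main thread created by startThreadPool() does not count towards that limit, and the last statement turns the current thread into a Binder thread as well, so up to 8 Binder threads can exist.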
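The counting rule amounts to a one-line formula. A minimal sketch, where maxBinderThreads is a hypothetical helper and joinedThreads is the number of threads that call joinThreadPool() directly:

```cpp
#include <cassert>

// Upper bound on Binder threads in one process under the counting rule above:
// driver-requested threads, plus the main thread if the pool was started,
// plus every thread that joined the pool on its own.
int maxBinderThreads(int maxThreadCount, bool poolStarted, int joinedThreads) {
    assert(maxThreadCount >= 0 && joinedThreads >= 0);
    return maxThreadCount + (poolStarted ? 1 : 0) + joinedThreads;
}
```

For the snippet above, maxBinderThreads(6, true, 1) gives 8; with the default limit and no joined thread, maxBinderThreads(15, true, 0) gives 16.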

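In the Binder architecture, only the first Binder main thread (the Binder:pid_1 thread) is created actively by the application; ordinary threads in the pool are created by the Binder driver according to IPC demand. Binder threads are created as follows: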

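Each time Zygote forks a new process, the Binder thread pool is created along with it, and spawnPooledThread is called to create the Binder main thread. When a thread running binder_thread_read finds that there is no idle thread, no pending thread-creation request, and the limit has not been reached, a new Binder thread is created.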

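Binder transactions come in three types:

call: the initiating thread is not necessarily a Binder thread. In most cases the target is only a process, and it is not known in advance which thread will handle the request, so no thread is specified;

reply: the initiator is always a Binder thread, and the receiving thread is the one that issued the original call (which may be any thread, not necessarily a Binder thread);

async: much like call, except that it is oneway and needs no reply. The initiating thread is not necessarily a Binder thread, and the target is only a process, with no specific thread designated.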

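Binder threads fall into three categories:

Binder main thread: during process creation, startThreadPool() calls spawnPooledThread(true) to create the Binder main thread. Numbering starts at 1, so the main thread is named Binder:pid_1, and it never exits.

Ordinary Binder threads: the Binder driver decides whether to create one based on whether an idle Binder thread is available, which leads to a callback into spawnPooledThread(false). These threads are named Binder:pid_x;

Other Binder threads: threads that do not go through spawnPooledThread but call IPCThreadState::self()->joinThreadPool() directly, adding the current thread to the Binder thread queue. For example, the main threads of mediaserver and servicemanager are Binder threads, whereas the main thread of system_server is not.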

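Conclusion

Taking registration of the Media service as an example, one diagram explains how the Media service (client) and ServiceManager (server) complete service registration with the help of the Binder driver: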

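oneway vs. non-oneway: both must wait for the Binder driver's BR_TRANSACTION_COMPLETE response. The main difference is that a oneway call returns as soon as it receives BR_TRANSACTION_COMPLETE and does not wait for BR_REPLY.

If oneway is used for a local call, it has no effect and the call remains synchronous.

If oneway is used for a remote call, the caller does not block; it just sends the transaction data and returns immediately. When the interface implementation eventually receives the call, it arrives as a regular call from the Binder thread pool, like a normal remote call.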
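The difference in waiting behaviour can be sketched as a loop over the commands returned by the driver. This is a simplified model rather than the libbinder code: Br, WaitResult, and this waitForResponse are hypothetical, and the incoming queue stands in for data read from the driver.

```cpp
#include <cassert>
#include <deque>

// Hypothetical return commands, named after the BR_* codes in the text.
enum class Br { TransactionComplete, Reply, Noop };

enum class WaitResult { Done, StillWaiting };

// Consume driver responses until the call may return to its caller.
// A oneway call is finished once the driver acknowledges the transaction;
// a two-way call keeps consuming until the reply arrives.
WaitResult waitForResponse(std::deque<Br>& incoming, bool oneway) {
    while (!incoming.empty()) {
        Br cmd = incoming.front();
        incoming.pop_front();
        switch (cmd) {
        case Br::TransactionComplete:
            if (oneway) return WaitResult::Done;
            break;
        case Br::Reply:
            assert(!oneway);  // a oneway call returns before any reply is read
            return WaitResult::Done;
        case Br::Noop:
            break;
        }
    }
    return WaitResult::StillWaiting;  // the real code would block in the driver here
}
```

Given the same acknowledgement, the oneway caller is done immediately, while the two-way caller is still waiting until a reply is queued.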